In recent years, remote usability testing of user interactions has flourished. The ability to run tests from a distance has undoubtedly broadened the horizons of many a UXer and strengthened the design of many interfaces. Even though mobile devices continue to proliferate, testing mobile interactions remotely has only recently become technologically possible. We took a closer look at several of the tools and methods currently available for remote mobile testing and put them to the test in a real-world usability study. This article discusses our findings and recommendations for practitioners conducting similar tests.

History of Remote Usability Testing

Moderated remote usability testing is a usability evaluation in which researchers and participants are located in two different geographical areas. The first remote usability evaluations of computer applications and websites were conducted in the late 1990s. These studies were almost exclusively automated and were neither moderated nor observed in real time. Qualitative remote user testing was also conducted, but the research was asynchronous: users were prompted with pre-formulated questionnaires, and researchers reviewed their responses afterward.

Remote user research has come a long way since then. Researchers can use today’s internet to communicate with participants in a richer and more flexible way than ever before. Web conferencing software and screen sharing tools have made initiating a moderated remote test on a PC as simple as sharing a link.

Pros and Cons of Remote Testing

In deciding whether a remote usability test is right for a particular project, researchers must consider the benefits the methodology affords as well as the drawbacks. Table 1 details this comparison.

Table 1: Benefits and drawbacks of remote usability testing

| Benefits of Remote Testing | Drawbacks of Remote Testing |
| --- | --- |
| Enhanced Research Validity: + Improved ecological validity (e.g., user’s own device) + More naturalistic environment; real-world use case | Reduction in Quality of Data: – Inherent latency in participant/moderator interactions – Difficult to control testing environment (distractions) |
| Lower Cost & Increased Efficiency: + Less travel and fewer travel-related expenses + Decreased need for lab and/or equipment rental | Expanded Spectrum of Technical Issues: – Increased reliance on quality of Internet connection – Greater exposure to hardware variability |
| Greater Convenience: + Ability to conduct global research from one location + No participant travel to and from the lab | Diminished Participant-Researcher Interaction: – Restricted view of participant body language – Sometimes difficult to establish rapport |
| Expanded Recruitment Capability: + Increased access to diverse participant sample + Decreased costs may allow for more participants | Reduced Scope of Research: – Typically limited to software testing – Shorter recommended session duration |

Remote Usability Testing with Mobile Devices

With mobile experiences increasingly dominating the UX field, it seems natural that UX researchers would want to expand their remote usability testing capabilities to mobile devices. However, many of the mobile versions of the tools commonly used in desktop remote testing (for example, GoToMeeting and WebEx) don’t support screen sharing on mobile devices. Similar tools designed specifically for mobile platforms were not available until fairly recently.

As a result, researchers have traditionally been forced to shoehorn remote functionality into their mobile test protocols. Options were limited to improvised methods such as resizing a desktop browser to mobile dimensions, or the “laptop hug” technique, in which users are asked to turn their laptop around and use the built-in webcam to capture their interactions with a mobile device for the researcher to observe.

Unique Challenges of Testing on Mobile Devices

In addition to the limitations of common remote usability testing tools, other unique challenges are inherent in tests with mobile devices. First, operating systems vary widely—and change rapidly—among the mobile devices on the market. Second, the tactile interaction with mobile devices cannot be tracked and captured as readily as long-established mouse and keyboard interactions. Third, mobile devices are, by their nature, wireless, meaning reduced speed and reliability when transferring data. Due to the unique challenges of testing mobile devices, the tools currently available on the market still struggle to meet all the needs of remote mobile usability tests.

Overview of the Tools

In many moderated remote testing scenarios focusing on desktop and laptop PCs, researchers can easily view a live video stream of the participant’s computer screen or, conversely, the remotely located participant can control the researcher’s PC from afar. Until recently, neither scenario was possible for testing focused on mobile devices.

In the last decade, improvements in both portable processing architectures and wireless networking protocols have paved the way for consumer-grade mobile screen streaming. As a result, researchers are beginning to gain a means of conducting remote mobile user testing accompanied by the same rich visuals they’ve grown used to on PCs.

Tool configurations

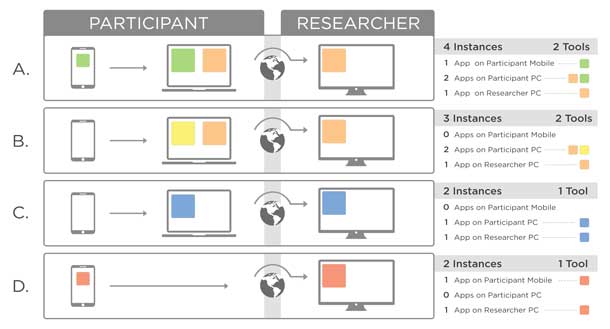

At present, moderated remote testing on mobile devices can be accomplished in a number of ways. These methods represent a variety of software and hardware configurations and are characterized by varying degrees of complexity. Figure 1 depicts four of the most common remote mobile software configurations that exist today.

- Configuration A: First, the participant installs one tool on both their mobile device and computer. This enables them to mirror their mobile screen onto their PC. Then, both the participant and the researcher install one web conferencing tool on each of their PCs. This enables the researcher to see the participant’s mirrored mobile screen shared from the participant’s PC. Example: Mirroring360.

- Configuration B: First, the participant installs one tool on their PC. The native screen mirroring technology on their mobile device (for example, AirPlay or Google Cast) works with the tool on their PC, so they do not need to install an app on their phone. Then, both the participant and the researcher install one web conferencing tool on each of their PCs. This enables the researcher to see the participant’s mirrored mobile screen shared from the participant’s PC. Examples include: Reflector 2, AirServer, X-Mirage.

- Configuration C: Both the participant and the researcher install one web conferencing tool on each of their PCs. The native screen mirroring technology on the participant’s mobile device (for example, AirPlay or Google Cast) works with the tool on their PC, so they do not need to install an app on their phone. In addition, this tool enables the researcher to see the participant’s mirrored mobile screen shared from the participant’s PC. Example: Zoom.

- Configuration D: The participant installs one tool on their mobile device. The researcher installs the same tool on their PC. The tool enables the participant’s mobile screen to be shared directly from their mobile device to the researcher’s PC via the Internet. Examples include: Join.me, Mobizen, TeamViewer, GoToAssist.

As researchers, we typically want to make life easy for our test participants. Here we would do that by minimizing the number of downloads and installations for the participant. As a result, a single installation on the remotely located participant’s end (configurations C and D) is clearly preferable to multiple installations (configurations A and B). While configuration C does not require the participant to download an app on their mobile device, it does require them to have a computer handy during the session. Configuration D, on the other hand, does not require the participant to use a computer, but requires them to download an app on their mobile device.
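The trade-off described above can be sketched as a small data model. This is only an illustration: the `Configuration` class, its field names, and the install counts are our own tally of the descriptions of configurations A through D, not part of any tool’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Configuration:
    """One remote mobile testing setup, seen from the participant's side."""
    name: str
    participant_installs: int   # assumed tally of installs the participant performs
    needs_mobile_app: bool      # must the participant install an app on the phone?
    needs_participant_pc: bool  # must the participant have a computer handy?


# Install counts are an assumption derived from the configuration descriptions:
# A = mirroring tool on phone and PC, plus web conferencing tool on PC.
# B = mirroring receiver on PC, plus web conferencing tool on PC.
# C = web conferencing tool on PC only.
# D = one sharing app on the phone only.
CONFIGURATIONS = [
    Configuration("A", 3, needs_mobile_app=True, needs_participant_pc=True),
    Configuration("B", 2, needs_mobile_app=False, needs_participant_pc=True),
    Configuration("C", 1, needs_mobile_app=False, needs_participant_pc=True),
    Configuration("D", 1, needs_mobile_app=True, needs_participant_pc=False),
]


def lightest_for_participant(configs):
    """Return the names of the configurations with the fewest participant installs."""
    fewest = min(c.participant_installs for c in configs)
    return [c.name for c in configs if c.participant_installs == fewest]


def usable_without_pc(configs):
    """Return the names of the configurations that work with a phone alone."""
    return [c.name for c in configs if not c.needs_participant_pc]


if __name__ == "__main__":
    print(lightest_for_participant(CONFIGURATIONS))
    print(usable_without_pc(CONFIGURATIONS))
```

Filtering on the fewest installs singles out configurations C and D, and the second filter shows that only D works when the participant has no computer available.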

Characteristics of the ideal tool

There are many tools that claim to support features that might aid in remote mobile testing. Unfortunately, when evaluated, most of these applications either did not function as described, or functioned in a way that was not helpful for our remote testing purposes.

As we began to sift through the array of software applications available in app stores and on the web, we quickly realized that we needed a set of criteria to assess the options. Table 2 summarizes our take on the ideal characteristics of remote usability testing tools for mobile devices.

Table 2: Descriptions of the ideal characteristics

| Characteristic | Description |
| --- | --- |
| Low cost | · The cheaper the better if all else is comparable |
| Easy to use | · Simple to install on participant’s device(s), easy to remove · Painless for participants to set up and use; not intimidating · Quick and simple to initiate so as to minimize time spent on non-research activities · Allows for remote mobile mirroring without a local computer as an intermediary |
| High performing | · Minimal lag time between participant action and moderator perception · Can run alongside other applications without impacting experience/performance · Precise, one-to-one mobile screen mirroring, streaming, and capture · Accurate representation of participants’ actions and gestures |
| Feature-rich | · Ability to carry out other vital aspects of research in addition to screen sharing (for example, web conferencing, in-app communication, recording) · Platform agnostic: fully functional on all major mobile platforms, particularly iOS and Android · Allows participants to make phone calls while mirroring screen · Protects participant privacy: allows participants to remotely control researcher’s mobile device via their own OR, if participant must share their own screen: clearly warns when mirroring begins; gives participant complete control over start and stop of screen sharing; snoozes device notifications while sharing screen; shares only one application rather than mirroring the whole screen |

How we evaluated the tools

Of the numerous tools we uncovered during our market survey, we identified six that together represented all four configuration types and embodied at least some of the aforementioned ideal characteristics. Based on in-house trial runs, we subjectively rated these six tools across five categories to compare their strengths and weaknesses more easily. The five categories, which encompassed our ideal characteristics, were:

- Affordability

- Participant ease-of-use

- Moderator convenience

- Performance and reliability

- Range of features

The rating scale was from 1 to 10, where 1 was the least favorable and 10 was the most favorable. The spider charts below display the results of our evaluation. As the colored fill (that is, the “web”) expands outward toward each category name, it indicates a more favorable rating for that characteristic. In other words, a tool rated 10 in all categories would have a web that fills the entire graph.
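For readers who want to put a number on how much of the graph a web fills, the following is a minimal sketch. It assumes the chart’s center represents 0 and that the axes are evenly spaced; the function name `web_fill_fraction` is hypothetical and was not part of our evaluation. With evenly spaced axes, the polygon’s area is the sum of the triangles formed by adjacent ratings, so the angle term cancels out of the ratio.

```python
def web_fill_fraction(ratings, max_rating=10):
    """Fraction of a spider chart's area covered by the rating polygon.

    Assumes evenly spaced axes with 0 at the center. Each pair of adjacent
    axes forms a triangle with area 0.5 * r1 * r2 * sin(angle); the constant
    0.5 * sin(angle) appears in both the web and the full chart, so it cancels.
    """
    n = len(ratings)
    if n < 3:
        raise ValueError("a spider chart needs at least three axes")
    web = sum(ratings[i] * ratings[(i + 1) % n] for i in range(n))
    full = n * max_rating ** 2
    return web / full


if __name__ == "__main__":
    # A tool rated 10 in all five categories fills the entire graph.
    print(web_fill_fraction([10, 10, 10, 10, 10]))
```

Note that the filled area depends on the order of the axes, so this fraction is best used to compare charts that share the same category order rather than as an absolute score.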

We are not affiliated with any of these tools or their developers, nor are we endorsing any of them. The summaries of each tool were accurate when this research was done in early 2016.

As of June 2016, the TeamViewer app for iOS exists, but does not support mirroring.

As of June 2016, a Join.me app for iOS exists, but does not support mirroring.

Mobizen and TeamViewer had strengths in affordability and ease of use, respectively. However, Join.me and Zoom fared the best overall across the five dimensions.

Having done this analysis, when it came time to conduct an actual study, we had the information we needed to select the right tool.

Case Study with a Federal Government Client

Ultimately, we can test tools until we run out of tools to test (trust us, we have). However, we also wanted to determine how they actually work with real participants, real project requirements, and real prototypes to produce real data. We had the opportunity to run a remote mobile usability test with a federal government client to further validate our findings.

Our client was interested in testing an early prototype of their newly redesigned responsive website. Because it was a federal government site, it was important to include a mix of participants from geographies across the U.S. The participants also had a specialized skill set, meaning recruiting would be a challenge. As a result, we proposed using these newly researched tools to remotely capture feedback and observe natural interactions on the mobile version of the prototype.

We chose two tools to conduct the study: Zoom (for participants with iOS devices) and Join.me (for participants with Android devices). We chose these tools because, as demonstrated by our tool analysis, they met our needs and were the most reliable and robust of the tools we tested for each platform.

To minimize the possibility of technical difficulties during the study itself, we walked participants through the installation of the tools and demonstrated the process to them in the days prior to the session. This time allowed us to address any issues with firewalls and network permissions that are bound to come up when working with web conferencing tools.

Using this method, we successfully recruited seven participants to test the mobile version of the prototype (as well as eight participants to test the desktop version). We pre-tested the setup with three participants whom we ultimately had to transfer to the desktop testing group due to technical issues with their mobile devices. Dealing with these issues and changes during the week prior to the study ensured that the actual data collection went smoothly.

Lessons Learned About Remote Mobile Testing

Not surprisingly, we learned a lot from this first real-world usability study using these methods and tools.

- Planning ahead is key. Testing the software setup with the participants prior to their scheduled session alleviated a great deal of stress during an already stressful few days of data collection. For example, our experience was that AirPlay does not work on enterprise networks. We were able to address this issue well in advance of the study.

- Practice makes perfect. Becoming intimately familiar with the tools to be used during the session allows you to more easily troubleshoot any issues that may arise. In particular, becoming familiar with what the participant sees on their end can be useful.

- Always have a backup. When technical issues arise, it’s always good to have a backup. We knew that if the phone screen sharing didn’t work during the session, we could quickly fall back on one of the less optimal, but still valid, mobile testing methods, such as resizing the browser to a mobile device-sized screen. If Zoom or Join.me didn’t work at all, we knew we could revert to our more reliable and commonly used tool for sharing desktops remotely, GoToMeeting. Fortunately, we didn’t need to use either of these options in our study.

- Put participants at ease. Give participants a verbal overview of the process and walk them through it on the phone, rather than sending a list of complex steps to complete on their own.

- Tailor recruiting. By limiting recruiting to either iOS or Android (not both), you will only need to support one screen sharing tool. In addition, recruit participants who already possess basic mobile device interaction skills, such as being able to switch from one app to another. These tech-savvy participants may be more representative of the types of users who would be using the product you are testing.

The Future of Remote Testing of Mobile Devices

While we’re optimistic about the future of remote mobile usability testing, there is certainly room for improvement in the tools currently available. Many of the tools mentioned in our analysis are relatively new, and most were not developed specifically for use in user testing. As such, these technologies have a long way to go before they meet the specifications of our “ideal tool.”

To our knowledge, certain characteristics have yet to be fulfilled by any tool on the market. In particular, we have yet to find an adequate means of allowing a participant to control the researcher’s mobile device from their own mobile device, or a tool that screen shares only a single app on the user’s phone or tablet, rather than the whole screen. Finally, and perhaps most importantly, we have yet to find a tool that works reliably with both Android and iOS, not to mention other platforms.

Nevertheless, mobile devices certainly aren’t going anywhere and demand for better mobile experiences will only increase. As technology improves and the need for more robust tools is recognized, it’s our belief that testing mobile devices will only get easier.

Author’s Note: The information contained in this article reflects the state of the tools as reviewed when this article was written. Since that time, the technologies presented have evolved, and will likely continue to do so.

Of particular note, one of the tools discussed, Zoom, has added new mirroring capabilities for Android devices. Although a Zoom app for Android was available when we reviewed the tools in early 2016, screen mirroring from Android devices was not supported. Therefore, this functionality is not reflected in its ratings.

We urge readers to be conscious of the rapidly changing state of modern technologies, and to be aware of the potential for new developments in all of the tools discussed.

More Reading

A Brief History of Usability by Jeff Sauro, MeasuringU, February 11, 2013.

Laptop Hugging, Ethnio Blog, October 29, 2011.

Internet communication and qualitative research: A handbook for researching online by Chris Mann and Fiona Stewart, Sage, 2000.

![Mirroring360’s highest rating: Affordability; Lowest ratings: Participant ease of use and Range of Features](https://uxpamagazine.org/wp-content/uploads/2016/07/16-3-Douglas-Fig2-mirroring360-radar-1-150x150.jpg)

![Reflector 2’s highest rating: Affordability; Lowest ratings: Participant ease of use and Range of Features](https://uxpamagazine.org/wp-content/uploads/2016/07/16-3-Douglas-Fig3-reflector2-radar-1-150x150.jpg)

![TeamViewer’s highest rating: Participant ease of use; Lowest rating: Affordability](https://uxpamagazine.org/wp-content/uploads/2016/07/16-3-Douglas-Fig4-teamviewer-radar-1-150x150.jpg)

![Mobizen’s highest rating: Affordability; Lowest rating: Range of Features](https://uxpamagazine.org/wp-content/uploads/2016/07/16-3-Douglas-Fig5-mobizen-radar-1-150x150.jpg)

![Join.me’s highest ratings: Performance and Moderator Convenience; Lowest rating: Affordability](https://uxpamagazine.org/wp-content/uploads/2016/07/16-3-Douglas-Fig6-joinme-radar-1-150x150.jpg)

![Zoom’s highest ratings: Range of Features and Moderator Convenience; Lowest rating: Affordability](https://uxpamagazine.org/wp-content/uploads/2016/07/16-3-Douglas-Fig7-zoom-radar-2-1-150x150.png)