ToT, NPS, SUS, SUPR-Q…$%&! There is an alphabet soup of metrics used by UX professionals in most major enterprises.

However, while most UXers spend considerable time developing and refining methods to capture the richness of the user’s perspective, they spend far less time learning and practicing how to make a strong business case for their findings.

We’ve experienced this vividly and have developed a framework to present an updated model of the various metrics collected within an organization to help UXers make a precise and defensible case for their work.

Quantifying UX with a 21st Century Metrics Model

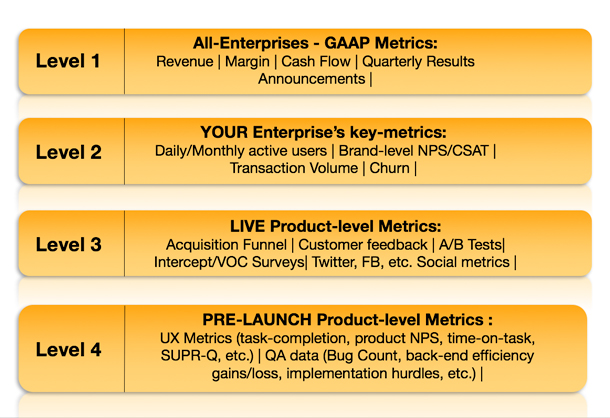

We’ve identified four levels of metrics:

Level 1 (L1) metrics are those defined under Generally Accepted Accounting Principles (GAAP). They are gathered by nearly all American enterprises, with corresponding International Financial Reporting Standards (IFRS) used around the world. These are rooted in rules for financial disclosure created domestically by the US Securities and Exchange Commission (SEC), and internationally by the International Accounting Standards Board, and emerged from the practices of industrial age companies of the mid-1900s. Most, if not all, enterprises that seek to disclose their financials in an official capacity hold GAAP or IFRS as a baseline. Since 2007, the SEC has accepted IFRS financial statements from foreign companies listed in the US without requiring reconciliation to GAAP, and it has signaled interest in moving toward IFRS.

While L1 metrics (for example, revenue or stock price) have been the focus of most companies for decades, L2 (an enterprise’s own key metrics), L3 (live product metrics), and L4 (pre-launch metrics) give employees tangible ways to think about, rally around, and optimize the L1 metrics.

In our view, the holy grail for UXers is to show an L4-L1 relationship. To illustrate this point, we chose two powerful examples.

Increasing online sales for a major e-commerce company

Situation

A large e-commerce company wanted to increase the number of online “bundles” sold to new and current customers.

These bundles could contain a wide mix of products and were generally set to repeatedly ship to customers at a range of times, providing customer value and a predictable revenue stream for this business.

The repeated sales also allowed the company to pre-purchase its products in bulk and keep them in-warehouse for minimal amounts of time, reducing both risk and operating costs.

Increasing the number of bundles sold and improving several L1 metrics—including revenue, stock-on-hand, and margin-on-product—was especially important as the company had pre-committed to a bundling strategy and was positioning itself for an acquisition.

Problem

The online bundle-creation flow was plagued with long check-out times for customers (L3), negative consumer feedback via the Contact Us page (L3), and low bundle sales in the live-site analytics (L2). Certain items could be bundled, while others could not, and depending on the frequency of shipment, some bundles were charged as one but shipped in three or more packages. Even worse, users who were able to create a bundle often canceled because what they received was different from what they’d expected at checkout (L3).

While the client’s lead researcher had some vague idea of each of the metrics covered within this framework, we presented an early stage version of the framework to help the team visualize the connection between metrics and focus their efforts.

Research solution

Using their live-site feedback and a series of heuristic evaluations, the client created three new designs (A, B, C) and decided to test them pre-launch along three L4 dimensions: time-on-task, ability to complete the bundle flow, and ability to explain the bundle concept to a friend.

With a task-based study design and a large sample size, the team decided that whichever design performed best along these three dimensions would be the “winner.” After careful statistical analysis, they found that design A performed significantly better on all three L4 metrics (lower time-on-task, and higher rates of bundle creation and bundle explanation) when compared with the other designs. People were completing the flow quickly and understanding what they had done.
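The article does not say which statistical test the team used. As one possible sketch, a two-proportion z-test can compare the share of participants who completed the bundle flow on two designs. The function name and all counts below are hypothetical, invented purely for illustration.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two completion rates."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the null hypothesis that both designs perform equally.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts (not from the study): participants who completed the flow.
z, p_value = two_proportion_z_test(successes_a=168, n_a=200,
                                   successes_b=140, n_b=200)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

With three designs, each pair would need its own comparison, so a correction for multiple comparisons is worth considering. The sketch also assumes samples large enough for the normal approximation, and it fails if every participant (or none) completes the flow on both designs, since the standard error is then zero.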

Some attributes of the design included examples of popular bundle options to anchor and explain the flow, and clear copy with corresponding visuals to illustrate each example.

The client’s lead researcher made the case to her stakeholders that design A, if implemented, would reduce the L3 live-site metrics of customer complaints and time-on-task, leading to a higher L2 of bundle completion, and positively affecting the L1 of revenue, margins, and operational costs.

The stakeholders greenlighted the new flow. The engineering team, excited by the improvements, prioritized the changes, which ultimately led to a long-term increase in L2 bundle-sales, increases in L1 revenue and margin, and a reduction in L1 operational costs.

The lead UX researcher was promoted within the organization, presented the report at the company all-hands meeting, and led a massive initiative around focused product measurement and enhancement within the organization.

The company, showing digital innovation, growth, and solid financial know-how with its new bundle-model, was acquired 10 months after the completion of the study. The acquirer noted the culture of digital measurement and optimization, as well as the bundle concept, as key to its buying the company.

As the lead researcher had a mixed business and research background and reported to a seasoned product director within an organization that was focused on the bundle creation flow, this was an environment ripe for this type of structured business framework.

Now, we’ll review a slightly different use case.

Connecting NPS (L4) to increased revenue (L1)

Situation

A large health insurance provider was testing four redesigns of the flow that members use during open enrollment to learn about and select their health benefits.

Problem

The company’s product team had received negative feedback from users about the information presented in the current flow and was planning to test three new options along a slew of L4 KPIs, spending weeks on data formatting and analysis. L4 KPIs included the ability to explain the selected plan to a friend, the users’ expected vs. actual ease of use of the tool, understanding of plans selected, and views on the tool’s appropriateness for the workplace.

The product team hadn’t given a single thought to connecting these KPIs to any other higher-order set of metrics to make a case for their research and design suggestions within the larger organization.

Research solution

We held a strategy session in which we showed the team a version of this framework, and encouraged them to think in a more structured way about their place within the massive enterprise.

They went back to their organization and asked what metrics their managers and managers’ managers (up to the VP of product) generally used to make decisions for this product line. They had never asked these questions before. The key metric ended up being an L2 of brand-level NPS of the buyers, not the users, of the health insurance selector tool. (Yes, it’s a mouthful, and it’s also measured by a survey designed outside of the product organization.)

The product team figured that this key L2 of buyers’ brand-level NPS could be informed by a product-level buyer’s NPS (L4), which they could gather and analyze through their research study. In turn, they reduced many of their other KPIs and changed their user group from just users, to users and buyers, and budgeted for more generative research around the buyers for use in future designs.

They ran a large-sample, task-based study and carefully compared the four designs based on the users’ ability to explain benefits to a friend, the buyers’ views on appropriateness for the workplace, and NPS.
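For readers less familiar with how NPS is computed, here is a minimal sketch using the standard definition: the percentage of promoters (ratings of 9 or 10 on a 0 to 10 scale) minus the percentage of detractors (ratings of 0 to 6). The design labels and ratings are hypothetical and are not data from the study.

```python
def net_promoter_score(ratings):
    """Return NPS (from -100 to 100) for 0-10 likelihood-to-recommend ratings."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 through 6
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical buyer ratings per design, invented for illustration only.
ratings_by_design = {
    "Design 1": [10, 9, 9, 8, 7, 10, 6, 9],
    "Design 2": [7, 6, 8, 5, 9, 10, 4, 7],
}

for design, ratings in ratings_by_design.items():
    print(design, net_promoter_score(ratings))
```

Computing the score separately for buyers and for users keeps the product-level buyer NPS comparable with the brand-level buyer NPS it is meant to inform.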

This led to a clear winner among the four designs. We created a report that connected L4 product-level NPS to L2 brand-level NPS to L1 revenue. The report went up the food chain, and the product changes were prioritized by the engineering team. A few months after implementation, the product line enjoyed a 20% lift in revenue.

The product team was asked to present their methods to the entire organization. In doing so, they generated a long-term plan to shift the research culture to focus on the metrics execs care about, and worked to create other exec-level metrics beyond product and brand-level NPS.

Using the Metrics Model in Your Organization

These are just two examples of the application of this framework in a business context. We hope they spur dozens more in your mind.

As an exercise, we’d encourage you to play around with the framework. For practice, pick a company—let’s say an online video company—then tell a story: for example, a decrease in time-finding-favorite-TV-show on design A in the pre-launch study (L4) holds true when design A is run on the live-site (L3), which links to the L2 metric of increased program viewership, and ties directly to the L1 metric of more revenue.
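One way to practice is to write the story down as structured data and check that it really runs from L4 to L1. The sketch below is only an illustration: `MetricLink` and `tell_story` are hypothetical names, and the level labels are shortened from the enterprise metrics model.

```python
from dataclasses import dataclass

# Level names, shortened from the enterprise metrics model.
LEVEL_NAMES = {
    1: "GAAP metrics",
    2: "Enterprise key metrics",
    3: "Live product metrics",
    4: "Pre-launch metrics",
}


@dataclass(frozen=True)
class MetricLink:
    level: int   # 1 through 4, as in the model
    metric: str  # what was measured
    change: str  # the observed or expected movement


def tell_story(links):
    """Render an L4-to-L1 chain, rejecting chains that are out of order."""
    levels = [link.level for link in links]
    if any(level not in LEVEL_NAMES for level in levels):
        raise ValueError("levels must be between 1 and 4")
    if not levels or levels[0] != 4 or levels[-1] != 1:
        raise ValueError("a story should start at L4 and end at L1")
    if any(a <= b for a, b in zip(levels, levels[1:])):
        raise ValueError("levels must strictly descend toward L1")
    return " -> ".join(f"L{l.level} {l.metric}: {l.change}" for l in links)


# The online video example, expressed as a chain.
video_story = [
    MetricLink(4, "time to find favorite TV show (pre-launch study)",
               "decreases on design A"),
    MetricLink(3, "time to find favorite TV show (live site)",
               "decrease holds"),
    MetricLink(2, "program viewership", "increases"),
    MetricLink(1, "revenue", "increases"),
]
print(tell_story(video_story))
```

The check allows a level to be skipped, as in the health insurance example, where product-level NPS (L4) was tied to brand-level NPS (L2) and then to revenue (L1).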

We hope you use this framework in your day-to-day work to:

- Learn about what business-metrics are key within your organization

- Visualize the vaguely linear relationship among UX and other business metrics

- Make informed decisions about the metrics you gather

- Make a precise and defensible case about how your work affects the key business metrics

- Earn influence to shape the prioritization of these metrics, and lead more high-quality, impactful research within your organization

We see this as an ongoing conversation and are excited to hear from you.

[greybox]

Description of Figure 2: Enterprise metrics model

- Level 1: All Enterprises – GAAP Metrics: Revenue | Margin | Cash Flow | Quarterly Results Announcements

- Level 2: Your Enterprise’s key metrics – Daily/Monthly active users | NPS/CSAT | Transaction Volume | Lead-revenue ratio | Churn

- Level 3: Live Product Metrics – Customer Acquisition Funnel | A-B Tests | Intercept/VOC Surveys | Off-site Social Metrics

- Level 4: Pre-Launch Metrics – QA data (Bug count, back-end efficiency gains/loss, implementation hurdles, etc.) | UX Metrics (Ease-of-use, NPS, SUPR-Q, etc.)

[/greybox]