As practitioners, we solve problems and adapt to circumstances. Usually, we can use common tools and methods to solve the problems we are facing, but every once in a while we are faced with a challenge that requires us to think outside the box.

I was recently preparing to run a usability study on an Internet of Things (IoT) product that was to be used in the home on a daily basis. In this study, participants would receive a prototype of the product in the mail and be required to perform a series of tasks each day at a certain point in their daily routine, for seven consecutive days, and then provide feedback about their experience immediately afterward. This was different from any usability study I had done in the past for a few reasons:

- It was an unmoderated study that required performing tasks with a physical product instead of an interface.

- I had no control over when the participant would perform the tasks each day.

- The study was going to take place over several days, and participant feedback was to be collected each day.

Given these constraints, it became clear that the typical methods of running an unmoderated study were not going to cover all of the challenges this study presented.

To run this study successfully, I needed a way to confirm that participants were completing the tasks each day. Without a digital product to capture interactions, I had to find another way to record this. I also needed to send a different survey to participants on each day of the study, and to ensure that each survey was sent on the correct day and only after participants indicated they had completed the daily tasks. I determined that sending the surveys and recording the confirmation of task completion would be best handled by an automated system.

With so many options for automated messaging services, I narrowed my search to systems that could do the following:

- Send messages once triggered by the participant’s actions.

- Be triggered over and over for several days without the messages getting mixed up.

- Be simple and convenient for the participants to use so as not to distract from the product they were testing.

Of all the methods researched, chatbots were found to be best because they operate based on triggers from a person, they allow for a conversation or flow of actions to take place, and they can repeat actions day after day.

Initial Study Planning

Before a chatbot could be built, I needed to establish a plan for how it should behave and what it would be used to accomplish.

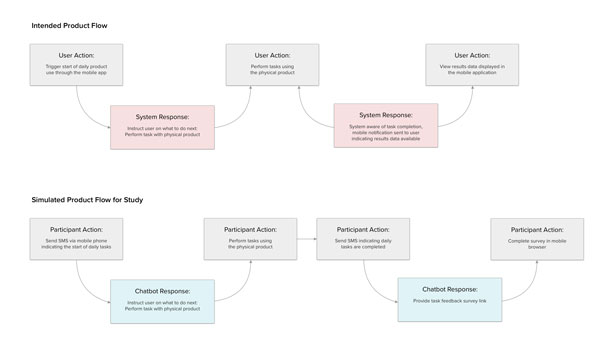

Because this project was a usability study, an initial test plan was created that included all of the details, from the goals of the study to how it would be run. A flow diagram of the daily participant tasks was crafted based on the intended use of the product (see Figure 1). Establishing this flow was critical to the study because it determined what actions would be required of the participant and what was considered successful completion of the tasks for the day. It was also critical for creating the chatbot because it identified the moments where moderation by the system was needed to move the participant along, and it indicated when the tasks were completed for the day so that the chatbot could communicate this to the participant.

As part of the test plan, the method of communication with participants needed to be determined. A powerful benefit to working with chatbots is they can be placed into any type of messaging service. This provides the flexibility to use any communication platform that participants are already familiar with or that fits the use case of the product being tested.

The product tested in my study had a mobile application component that was excluded from the study. However, by placing a chatbot in an SMS (short message service) messaging platform and including steps in the task flow that required participants to interact via SMS, I was able to incorporate a mobile component into the study. Also, since SMS messaging is so widely used, participants had no issues performing this part of the daily task. Even though SMS messaging was used in this example, any messaging platform could utilize chatbots in a similar way.

Since this study was to run for seven days and have seven different surveys, it required a deep level of planning that led to developing a “schedule” for each day of the study that contained the following:

- Steps for the Daily Participant Tasks: The series of actions the participant would perform for the day. These steps would be the same each day and were defined by the flow as previously described and shown in Figure 1. Repeating the same actions created a pattern where participants could easily learn what they needed to do and get into the habit of performing the steps.

- Survey Questions: The questions that participants would be asked on each day of the study. Every day had a unique survey with specific questions. The surveys were created online and could be accessed via a web link.

- Participant Trigger Phrases: The messages sent from participants via SMS that would trigger a specific bot flow. There were two messages for each day of the study: one to indicate the start of the tasks and one to indicate the completion of the tasks. Trigger phrases were limited to two words to remain simple and straightforward for the participant, reducing the number of possible errors.

- System Responses: The messages that would be sent to participants via SMS after each incoming trigger phrase was received. These were the responses used when configuring the chatbot dialog, and they were intended to guide the participant in what task to perform next. Response messages were the same each day, but each included the specific number of the day in the study as a way of providing reassurance that they were in the correct bot flow. Additionally, the final response message for a given day told the participants that they were done for the day so it was clear they had fulfilled the daily tasks.
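
A schedule like this can also be written down as structured data before any chatbot is configured. The following is only a minimal sketch of that idea, not the format used in the study: the `DaySchedule` fields, the "Done One" style completion phrases, the placeholder response text, and the `example.com` survey links are all hypothetical. Only the "Day One" start phrase and the two-word limit come from the study itself.

```python
from __future__ import annotations

from dataclasses import dataclass

DAY_WORDS = ["One", "Two", "Three", "Four", "Five", "Six", "Seven"]


@dataclass(frozen=True)
class DaySchedule:
    day: int
    start_trigger: str   # sent by the participant to begin the daily tasks
    done_trigger: str    # sent by the participant after finishing the tasks
    start_response: str  # bot reply that guides the participant to the next task
    done_response: str   # final bot reply for the day
    survey_url: str      # link to that day's unique survey


def build_schedule(base_url: str = "https://example.com/survey") -> list[DaySchedule]:
    """Build one schedule entry per day (all wording here is placeholder text)."""
    schedule = []
    for day, word in enumerate(DAY_WORDS, start=1):
        schedule.append(
            DaySchedule(
                day=day,
                start_trigger=f"Day {word}",
                done_trigger=f"Done {word}",  # hypothetical completion phrase
                start_response=f"Day {day}: please begin today's tasks.",
                done_response=f"Day {day}: thank you, you are done for today.",
                survey_url=f"{base_url}/day-{day}",  # hypothetical link
            )
        )
    return schedule


def validate(schedule: list[DaySchedule]) -> list[str]:
    """Return a list of problems: phrases over two words or used twice."""
    problems = []
    seen = set()
    for entry in schedule:
        for phrase in (entry.start_trigger, entry.done_trigger):
            if len(phrase.split()) > 2:
                problems.append(f"Day {entry.day}: '{phrase}' is longer than two words")
            key = phrase.lower()
            if key in seen:
                problems.append(f"Day {entry.day}: '{phrase}' is used more than once")
            seen.add(key)
    return problems
```

Checking the schedule this way before building the bot is one way to catch a duplicated or overly long trigger phrase while it is still cheap to fix.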

Building the Chatbot

A variety of chatbot platforms are available today, all offering a wide range of capabilities. Choosing the best one depends on the specific needs of the project. Identifying criteria that fit the needs of the project helps to narrow the options when researching different platforms. The platform I chose to use for this study was based on my preference to have a very simple and visual UI so I could build quickly without a big learning curve. It also provided additional features that made it easy to integrate with an SMS number and view the message conversation history for created chatbots.

Chatbot Structure

Chatbot conversation paths are essentially flow diagrams; they can be anything from a single, linear path to a complex tree structure. Typically, with a complex chatbot flow, if you were to go down a conversation path and want to change the topic you were discussing, you could step back or even re-enter the flow from the beginning and choose a different path. However, doing this may lead to going down paths you have been down before and re-entering the same prompts multiple times to find the correct path.

While this may be acceptable for chat experiences, it poses risks when the goal is to avoid sending the same message twice or sending responses out of the intended order. In a usability study, the most important thing is that participants do not lose their place in the flow or accidentally travel down the wrong path, because either could leave them unsure of where they are in the study or cause them to receive an incorrect survey.

For the chatbot in this study, I decided to go with a simple, independent conversation flow for each of the days of the study (see Figure 2), rather than combining them into one complex structure with seven different paths. I felt it was better to have the participant enter a new bot flow at the beginning of each day and exit at the end of the tasks for that day because it presented the lowest risk for the participant to end up on the wrong path.

Flows and Triggers

Chatbot triggers are words or phrases that, when received by a chatbot, instruct it to enter a specific dialog flow. Each individual flow is configured with a unique trigger word, so when that trigger word is received, the bot knows which flow to enter. When using chatbots for a study, triggers that are simple and based on the tasks the participant is performing are easier for the participant to remember, and sending them serves as a confirmation of the action.

For this study, a single chatbot conversation flow was built based on the daily task flow (see Figure 3) and was then replicated for each of the seven days of the study. Since the actions were to be the same each day, the flow itself did not change; the response text for each day was updated to reflect the corresponding day, and the corresponding survey links were added. Despite sharing the same general flow, each was accessed independently because of the unique trigger words assigned to it. For example, the trigger word for the first day was “Day One,” which triggered the chatbot to send responses specific to the first day of the study.
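
For readers who think in code, the idea of one flow template replicated per day, with each copy reachable only through its own trigger, can be sketched as below. This is an illustration under my own assumptions and not how any particular chatbot platform works internally: the `build_flows` and `DailyFlowBot` names, the "Done One" style completion phrases, and the reply wording are hypothetical. The bot only releases a day's survey if the participant first entered that same day's flow.

```python
DAY_WORDS = ["One", "Two", "Three", "Four", "Five", "Six", "Seven"]


def build_flows(survey_urls):
    """Replicate one flow template per day, keyed by lowercase trigger phrase."""
    flows = {}
    for day, (word, url) in enumerate(zip(DAY_WORDS, survey_urls), start=1):
        # Start trigger: the participant enters that day's flow.
        flows[f"day {word}".lower()] = (
            "start", day, f"Day {day}: begin your tasks, then reply 'Done {word}'."
        )
        # Completion trigger (hypothetical phrase): the participant exits the flow.
        flows[f"done {word}".lower()] = (
            "done", day, f"Day {day} survey: {url} You are done for today."
        )
    return flows


class DailyFlowBot:
    def __init__(self, flows):
        self.flows = flows
        self.active_day = {}  # participant -> day whose flow they are currently in

    def handle(self, participant, text):
        """Return the bot's reply, or None when no automated reply should be sent."""
        key = " ".join(text.lower().split())  # ignore case and extra spaces
        match = self.flows.get(key)
        if match is None:
            return None  # not a known trigger
        kind, day, reply = match
        if kind == "start":
            self.active_day[participant] = day
            return reply
        # Completion trigger: only valid inside the matching day's flow.
        if self.active_day.get(participant) != day:
            return None
        del self.active_day[participant]  # exit the flow for the day
        return reply
```

Because every day is its own small flow, a stray or repeated message cannot move a participant onto another day's path; at worst it produces no automated reply.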

Connecting the Chatbot to a Messaging Service

The final step before a chatbot can be used is to connect it to a messaging platform. This is done by linking a messaging platform that is compatible with the chatbot platform via API keys. Once connected, the chatbot can receive incoming messages and return its responses through the messaging platform.

The SMS messaging platform used in this project allowed for outbound messaging to mobile numbers, which was utilized to initiate the SMS messaging chain with participants, starting with a welcome message. A welcome message such as this can serve two purposes: to get participants accustomed to using the message thread for interactions and to eliminate the risk of them messaging the wrong number.

Study Preparation

Once the chatbot is set up, a pilot study is the best way to test the chatbot conversation paths over an extended period of time and to ensure participants understand the required tasks and chatbot responses.

Even with a successful pilot study, it is helpful to have a plan for monitoring the interactions throughout the study such as the following:

- Forward Incoming Messages: Some chatbot platforms can forward incoming messages received by the chatbot as notifications to other mobile numbers or even to Slack channels. Any time the chatbot receives a new message, a notification is sent, allowing the messages to be monitored in real time.

- Monitor Messages Through the Chatbot Platform: Some chatbot platforms provide the ability to view full message conversations between a participant and the chatbot. Additional functionality, such as being able to pause the chatbot responses and to type and send messages manually, may also be available. With these capabilities, the conversations can be monitored remotely, and any issues can be handled directly in the SMS message chain rather than by contacting the participant through another channel and possibly interrupting their experience.
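
Platforms that offer these features handle them for you, but the behavior can be sketched in a few lines to show what to look for when evaluating one. Everything in this sketch is an assumption made for illustration: `MonitoredBot`, `reply_for`, `send`, and `notify` are hypothetical names, and `send` and `notify` stand in for whatever SMS and notification services are actually used.

```python
class MonitoredBot:
    """Wraps a reply function with forwarding, history, pause, and manual send."""

    def __init__(self, reply_for, send, notify):
        self.reply_for = reply_for  # (participant, text) -> reply string or None
        self.send = send            # (participant, text) -> delivers a message
        self.notify = notify        # (line) -> forwards a notice to the researcher
        self.paused = set()         # participants whose automated replies are paused
        self.history = {}           # participant -> list of (sender, text)

    def _log(self, participant, sender, text):
        self.history.setdefault(participant, []).append((sender, text))

    def on_incoming(self, participant, text):
        self._log(participant, "participant", text)
        self.notify(f"{participant}: {text}")  # forward every incoming message
        if participant in self.paused:
            return  # researcher has taken over this conversation
        reply = self.reply_for(participant, text)
        if reply is not None:
            self._log(participant, "bot", reply)
            self.send(participant, reply)

    def pause(self, participant):
        self.paused.add(participant)

    def resume(self, participant):
        self.paused.discard(participant)

    def send_manual(self, participant, text):
        """Send a researcher-typed message in the same thread as the bot."""
        self._log(participant, "researcher", text)
        self.send(participant, text)
```

In this sketch, incoming messages are still forwarded and logged while a conversation is paused, so the researcher keeps full visibility while replying by hand.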

Providing Instruction for Participants

Since this was a remote study, participants needed to be provided with enough instruction to go through the study on their own. To do so, the product packages mailed to each participant contained instruction cards with detailed steps on how to complete the study. The cards also included a list of the different trigger words participants needed to send via SMS each day and the points in the flow of tasks at which to send them.

Ideal Applications

Some might consider the amount of preparation for this study to be excessive or off-task from a typical usability study, but I argue getting the response collection right was necessary to get the best results possible. With a simple way to send daily surveys, I was able to keep the participant focused on the tasks in the study and could ensure the correct surveys were sent at the ideal time. The simulated mobile interaction was also achieved thanks to this prep work, allowing participants to experience a more realistic scenario.

Because this took some effort to set up, this method may not be ideal for just any usability study. However, it is a very useful option in situations where the goal is to achieve the following:

- Automation: In situations where there is a lot of back-and-forth messaging or sending of surveys, as in my case, this option works great because the chatbot can listen and send specific messages at the appropriate times. It is also great for repetitive scenarios, such as performing the same action each day for several days.

- Minimal Contact with Participant: For situations where you cannot or do not want to have a person interacting with a participant, this is a great option to have the system handle the interaction. The ability to intervene in the conversation is also helpful if there is a chance that participant responses will be unpredictable and cannot all be programmed into the chatbot flow.

Conclusion

In the end, all of the work that went into researching and setting up this chatbot interaction really paid off when it came time for the study. The task flow was simple, and participants picked up on the pattern right away. We had very few issues after the first day.

Planning out the study schedule ahead of time made it easy to build the chatbot dialog flows without missing any details. The instruction cards provided participants with all the information they needed, and all participants completed all of the required tasks and surveys. After the first few days of the study, I was able to step back and let the study run without needing to closely monitor the messaging.