Overview

Machine learning (ML) practitioners face a growing problem: Products and services increasingly generate and rely on larger, more complex datasets. As datasets grow in breadth and volume, the ML models built on them become more complex. And as those models grow more complex, they become more opaque.

Without clear explanations, end users are less likely to trust and adopt ML-based technologies. Without a means of understanding model decision making, business stakeholders have a difficult time assessing the value and risks associated with launching a new ML-based product. Without insight into why an ML application is behaving in a certain way, application developers have a harder time troubleshooting issues, and ML scientists have a more difficult time assessing their models for fairness and bias. To further complicate an already challenging problem, the audiences for ML model explanations come from varied backgrounds, have different levels of experience with statistics and mathematical reasoning, and are subject to cognitive biases. They also rely on ML and explainable artificial intelligence (XAI) in a variety of contexts for different tasks. Providing human-understandable explanations for predictions made by complex ML models is undoubtedly a wicked problem.

In this article, I delineate the basics of XAI and describe popular methods and techniques. Then, I describe the current challenges facing the field and how UX can advocate for better experiences in ML-driven products.

What Is Explainable AI?

There are a variety of published definitions for XAI, but in its simplest form, it is the endeavor to make an ML model more understandable to humans.

| “Given an audience, an explainable AI is one that produces details or reasons to make its functioning clear or easy to understand.” –Alejandro Barredo Arrieta |

Depending on their context and needs, end users may rely on XAI to answer a number of questions, such as “How does it work?”, “What mistakes can it make?”, and “Why did it just do that?”

XAI literature uses these common terms to describe human understanding of ML processes.

- Interpretability: A passive characteristic of an ML system. If an ML system is interpretable, you can describe the ML process in human-understandable terms.

- Explainability: A technique, procedure, or interface between humans and an ML system that makes it understandable.

- Transparency: A model is transparent if it is understandable by itself, that is, if a human can understand its function without any need for post-hoc explanation.

- Opaque: The opposite of a transparent model is an opaque model. Opaque models are not readily understood by humans. To be interpretable, they require post-hoc explanations.

- Comprehensibility: The ability of a learning algorithm to represent its learned knowledge in a human-understandable fashion.

The terms “interpretable AI” and “explainable AI” are sometimes used interchangeably. Alejandro Barredo Arrieta, however, draws a clear distinction between the two terms. An interpretable AI is one that is understandable to humans. Explainable AI is an interface between humans and ML models that attempts to make the ML model interpretable.

When XAI Is Required

Some types of ML models are transparent and therefore interpretable by themselves. Examples of transparent models include:

- Linear/logistic regression

- Decision trees

- K-nearest neighbors

- Rule-based learners

Opaque models that require the addition of post-hoc explainability methods include:

- Tree ensembles

- Deep neural networks (DNNs)

- Reinforcement learners and agents
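To see why a model such as logistic regression counts as transparent, consider the minimal sketch below. The feature names, weights, and applicant are hypothetical, hand-set for illustration rather than fitted to any data. Because the model is linear in the log-odds, each feature's contribution is simply its weight times its value, so the reasoning behind a prediction can be read directly off the model without any post-hoc method.

```python
import math

# Hypothetical, hand-set weights for illustration only (not trained on real data).
WEIGHTS = {"income": 0.8, "debt_ratio": -1.5, "years_employed": 0.3}
BIAS = -0.2


def logit(x: dict[str, float]) -> float:
    """Log-odds of the positive class: bias plus the weighted sum of features."""
    return BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)


def predict_proba(x: dict[str, float]) -> float:
    """Probability of the positive class via the logistic (sigmoid) function."""
    return 1.0 / (1.0 + math.exp(-logit(x)))


def explain(x: dict[str, float]) -> list[tuple[str, float]]:
    """Per-feature contributions to the log-odds, largest magnitude first.

    For a linear model this decomposition is exact:
    BIAS + sum(contributions) == logit(x).
    """
    contributions = [(name, WEIGHTS[name] * x[name]) for name in WEIGHTS]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical applicant with already-scaled feature values.
applicant = {"income": 1.2, "debt_ratio": 0.9, "years_employed": 2.0}
for name, contribution in explain(applicant):
    print(f"{name:>15}: {contribution:+.2f}")
print(f"P(positive) = {predict_proba(applicant):.3f}")
```

For this hypothetical applicant, the `debt_ratio` term pulls the score down most strongly. A tree ensemble or DNN offers no such direct decomposition, which is why the opaque models listed above need post-hoc explainability methods.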

XAI Techniques and Methods

These are the major types of explanation techniques ML scientists use to develop XAI methods:

- Explanation by simplification provides explanation through rule extraction and distillation.

- Feature relevance explanation provides explanation through ranking or measuring the influence each feature has on a prediction output.

- Visual explanation provides explanation through visual representation of predictions.

- Explanation by concept provides explanation through concepts. Concepts could be user-defined (for example, stripes or spots in image data).

- Explanation by example provides explanation by analogy, surfacing examples from the data that support (proponents) or counter (opponents) a prediction.
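Of these techniques, explanation by example is perhaps the simplest to sketch. The code below is a minimal illustration under two assumptions: a hypothetical two-feature training set loosely modeled on a pathology task, and a simple nearest-neighbor reading of "proponents" (the closest training examples that share the predicted label) and "opponents" (the closest examples with a different label). Influence-based methods instead rank training examples by their estimated effect on the model's prediction, so treat this as an illustration of the idea, not a reference implementation.

```python
import math

# Hypothetical labeled training data: (feature vector, label).
TRAINING = [
    ((1.0, 1.0), "benign"),
    ((1.2, 0.8), "benign"),
    ((0.9, 1.3), "benign"),
    ((3.0, 3.2), "malignant"),
    ((3.1, 2.9), "malignant"),
    ((2.2, 2.0), "malignant"),
]


def nearest(query, examples, k):
    """Return the k examples closest to query by Euclidean distance."""
    return sorted(examples, key=lambda ex: math.dist(query, ex[0]))[:k]


def explain_by_example(query, predicted_label, k=2):
    """Split nearby training examples into proponents and opponents.

    Proponents share the predicted label; opponents carry a different label.
    """
    same = [ex for ex in TRAINING if ex[1] == predicted_label]
    different = [ex for ex in TRAINING if ex[1] != predicted_label]
    return nearest(query, same, k), nearest(query, different, k)


# The predicted label would come from some model; here it is simply assumed.
proponents, opponents = explain_by_example((1.5, 1.4), "benign")
print("Similar cases with the same label:", proponents)
print("Closest cases with a different label:", opponents)
```

Showing both lists lets a domain expert compare a new case against precedents on either side of the decision, which is the kind of analogy this technique relies on.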

Explainability methods can be model agnostic, meaning they can be applied to any type of model, or model specific, meaning they are designed to work with a particular type of model. Table 1 describes established XAI methods broken out by technique and model specificity.
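As an example of a model-agnostic method, Shapley values need nothing from the model except its predictions. The minimal sketch below computes them exactly by enumerating every coalition S of the other features and weighting each feature's marginal contribution by |S|!(n - |S| - 1)!/n!. It assumes that a feature absent from a coalition is replaced by a single baseline value; practical implementations typically rely on sampling or model-specific shortcuts and average over background data instead. Because the number of coalitions grows as 2^n, exact enumeration only suits toy models like the hypothetical one here.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction of a black-box model.

    model: callable taking a list of feature values and returning a number.
    x: the instance to explain; baseline: reference values for absent features.
    Cost grows exponentially with the number of features.
    """
    n = len(x)

    def value(coalition):
        # Features in the coalition keep their real values; the rest use the baseline.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalitions of the other features have size 0..n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    # Hypothetical "opaque" model with an interaction between features 0 and 1.
    return 2.0 * z[0] + z[0] * z[1] - 0.5 * z[2]


x = [1.0, 3.0, 2.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(p, 6) for p in phi])
```

For this toy model the result is approximately [3.5, 1.5, -1.0]: the third feature's linear term is attributed to it alone, and the interaction term z[0] * z[1] is split evenly between the first two features. The values also sum to f(x) - f(baseline), the efficiency property that makes Shapley values attractive for feature relevance explanation.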

Table 1: XAI Methods by Technique and Model Specificity

| Technique | Model agnostic | Model specific |
| --- | --- | --- |
| Explanation by simplification | Local Interpretable Model-Agnostic Explanations (LIME), G-REX | Simplified Tree Ensemble Learner (STEL), DeepRED |
| Feature relevance explanation | Shapley values, Automatic Structure Identification (ASTRID) | Mean Decrease Accuracy (MDA), Mean Increase Error (MIE), DeepLIFT |
| Visual explanation | Individual Conditional Expectation (ICE), Partial Dependence Plots (PDPs) | Layer-wise Relevance Propagation (LRP), Gradient-weighted Class Activation Mapping (Grad-CAM) |
| Explanation by concept | Testing with Concept Activation Vectors (TCAV) | |
| Explanation by example | Example-based explanations | |
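The ICE and PDP entries in the visual explanation row are model agnostic because they only query predictions. An ICE curve sweeps one feature of a single instance across a grid of values while holding its other features fixed; the PDP is the pointwise average of those curves over the dataset. The minimal sketch below computes the values that would be plotted (plotting itself is omitted), using a hypothetical toy model and three hypothetical instances.

```python
def ice_curves(model, data, feature, grid):
    """One curve per instance: predictions as `feature` sweeps over `grid`."""
    curves = []
    for row in data:
        curve = []
        for v in grid:
            modified = list(row)
            modified[feature] = v  # vary only this feature; hold the rest fixed
            curve.append(model(modified))
        curves.append(curve)
    return curves


def partial_dependence(model, data, feature, grid):
    """PDP: the pointwise average of the ICE curves."""
    curves = ice_curves(model, data, feature, grid)
    return [sum(c[k] for c in curves) / len(curves) for k in range(len(grid))]


def toy_model(z):
    # Hypothetical model; the z[0] * z[1] interaction makes the ICE curves diverge.
    return 2.0 * z[0] + z[0] * z[1] - 0.5 * z[2]


data = [[1.0, -2.0, 0.0], [0.5, 0.0, 1.0], [2.0, 2.0, 4.0]]
grid = [0.0, 1.0, 2.0]
ice = ice_curves(toy_model, data, feature=0, grid=grid)
pdp = partial_dependence(toy_model, data, feature=0, grid=grid)
```

Here the three ICE curves have slopes of 0, 2, and 4 because of the interaction term, while the PDP shows only their average slope of 2. That hidden heterogeneity is a common reason to plot ICE curves alongside a PDP.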

Local, Cohort, and Global Explanations

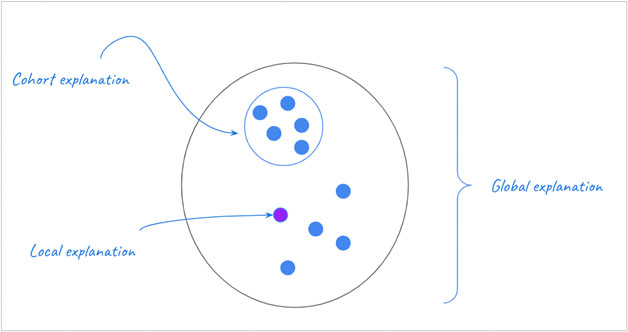

XAI methods also provide explanations at different levels of granularity. Figure 1 shows a set of predictions and which of them local, cohort, and global XAI would explain.

- Local explanation provides an explanation for a single prediction.

- Cohort explanation provides an explanation for a cohort or subset of predictions.

- Global explanation provides an explanation for all predictions, or the model decision making process itself.
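One common way to move between these levels, assumed here rather than prescribed by any particular method, is to compute a local attribution for every prediction and then aggregate: the mean absolute attribution over a subset gives a cohort explanation, and over all predictions a global one. The minimal sketch below uses a hypothetical linear model and dataset; for a linear model, the attribution w_j * (x_j - mean_j) equals each feature's Shapley value when the feature means serve as the baseline. Any other local method could be substituted for `local_explanation`, and the cohort filter (third feature at least 2.0) is an arbitrary choice for illustration.

```python
# Hypothetical linear model weights and a small hypothetical dataset.
WEIGHTS = [0.8, -1.5, 0.3]
DATA = [
    [1.2, 0.9, 2.0],
    [0.4, 0.1, 5.0],
    [2.0, 1.5, 1.0],
    [0.9, 0.3, 3.0],
]
MEANS = [sum(col) / len(DATA) for col in zip(*DATA)]


def local_explanation(row):
    """Per-feature attribution for one prediction, relative to the dataset mean."""
    return [w * (x - m) for w, x, m in zip(WEIGHTS, row, MEANS)]


def aggregate_importance(rows):
    """Mean absolute attribution per feature over a set of predictions."""
    attributions = [local_explanation(r) for r in rows]
    return [sum(abs(a[j]) for a in attributions) / len(attributions)
            for j in range(len(WEIGHTS))]


local = local_explanation(DATA[0])                               # one prediction
cohort = aggregate_importance([r for r in DATA if r[2] >= 2.0])  # a subset
global_ = aggregate_importance(DATA)                             # all predictions
```

With these numbers, the second feature dominates both views, but the first and third features swap places: the first edges ahead globally, while the third leads within the cohort. Differences like this are why a global explanation should not be assumed to describe every subgroup.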

Why XAI Is Important

Opaque AI models are frequently employed in industries ranging from retail to finance to healthcare. These models offer benefits, including the ability to apply ML to nuanced domains, potentially with increased prediction accuracy. However, if we cannot understand how these models reach decisions or explain those decisions in human-understandable terms, ML-based technologies carry several pitfalls.

- Illegitimate conclusions: There are instances when a model can arrive at an illegitimate conclusion, such as when there are deficiencies in the training data or when input data skews or shifts. Without knowing how a conclusion was reached, it is difficult to tell whether it is illegitimate.

- Bias and fairness: Models can arrive at unfair, discriminatory, or biased decisions. Without a means of understanding the underlying decision making, these issues are difficult to assess.

- Model monitoring and optimization (MLOps): As with the first two points, without XAI, model builders and operators struggle to identify and troubleshoot critical issues.

- Human use, trust, and adoption of AI: Generally, we are reluctant to adopt or trust technologies we do not understand. In cases in which technology is used to make critical decisions, we need explanations to effectively execute our own judgment.

- Regulations and rights: There are existing and proposed regulations intended to guarantee consumers the right to know the reasons behind algorithmic decision making in high-risk applications. Regulators will also need model explanations to verify that regulations and standards are met.

| “The danger is in creating and using decisions that are not justifiable, legitimate, or that simply do not allow obtaining detailed explanations of their behavior.” —Alejandro Barredo Arrieta |

XAI Is for Humans

One thing to keep in mind is that XAI is not just a tool, but also an interface between an AI model and a human. An XAI method could be accurate yet still be of poor quality if it does not meet the needs and context of the end user.

| “The property of ‘being an explanation’ is not a property of statements, it is an interaction. What counts as an explanation depends on what the learner/user needs, what knowledge the user (learner) already has, and especially the user’s goals.” —Robert R. Hoffman, Metrics for Explainable AI: Challenges and Prospects |

In the following sections, I outline how different types of users have different XAI needs and the importance of being mindful of human cognitive biases.

Different Humans, Different Needs

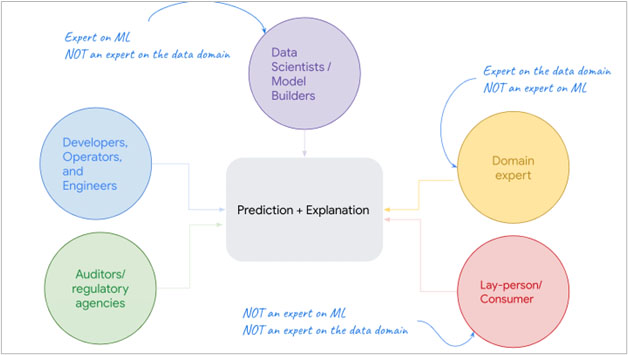

Consider Figure 2, which shows potential audiences for predictions and explanations. Table 2 breaks down their needs and expertise in a digital pathology scenario.

Table 2: Breakdown of Audience, XAI Need, and Relevant Expertise in a Digital Pathology Scenario

| Role | Scenario | XAI need | Relevant expertise |
| --- | --- | --- | --- |
| Developers, operators, and engineers | An engineer developing a digital pathology system. | Needs to monitor and troubleshoot systems and to ensure that predictions are served within a Service Level Objective (SLO). | Expert in the specific ML system but may have little to no experience with the dataset domain. |
| Data scientists/model builders | A data scientist training a model to be used in a digital pathology tool. | Needs to configure and train a model that meets the accuracy requirements of the application. | Expert in ML models and how to build them, with little to no experience with the dataset domain. |
| Domain expert | A pathologist using a digital pathology system with their patients. | Needs to verify, scrutinize, and certify results, and to articulate them to patients. Wants to gain additional knowledge. | Expert in the respective domain and responsible for the decision at hand, but with little to no expertise in ML processes. |
| Layperson/end user | A patient who had a biopsy tested with a digital pathology system. | Needs to understand diagnostic results and agree to next steps for their care. | May have little to no expertise in ML systems, the dataset, or statistical representations. |
| Auditors and regulatory agencies | A regulator reviewing a new digital pathology tool. | Needs to assess the tool's diagnostic efficacy and whether it meets regulatory requirements. | Expert in experimentation, statistics, diagnostics, and regulatory requirements, with significant expertise in related datasets. |

Table 2 shows that each type of consumer will have different levels of expertise with ML systems and the underlying dataset. They will also be using XAI to meet different needs under different contexts. Depending on who the user is, the explanation may need to account for different domain expertise, cognitive abilities, and context of use.

| “Generally, [an explanation is meaningful] if a user can understand the explanation, and/or it is useful to complete a task. This principle does not imply that the explanation is one size fits all. Multiple groups of users for a system may require different explanations. The Meaningful principle allows for explanations which are tailored to each of the user groups.” —P. Jonathon Phillips |

Human Biases

Not only do different audiences for XAI have distinct needs, but they are also all subject to natural human biases.

Confirmation Bias

Confirmation bias is a well-documented phenomenon of people seeking and favoring information that supports their beliefs. In terms of ML and XAI, confirmation bias can lead to both unjustified trust in and unjustified mistrust of a system. If an ML system presents predictions and explanations in line with a user’s preconceived notions, the end user is at risk of “over-trusting” the prediction. If the ML system presents predictions and explanations counter to a user’s preconceived notions, the end user risks mistrusting the prediction.

| “People always have some mixture of justified and unjustified trust, and justified and unjustified mistrust in computer systems… [XAI design] must be aimed at maintaining an appropriate and context-dependent expectation.” —Robert R. Hoffman |

Unjustified Trust/Over-Trust

One of our goals in designing XAI systems is that end users can accurately assess the limitations of the given ML model; that is, we want them to trust the ML prediction to an appropriate degree. However, studies have shown that end users may trust a prediction more than they should (“over-trust”) depending on the format in which explanations are presented. In particular, according to Upol Ehsan, users over-trust a prediction when they are given a mathematical explanation.

| “For example, a study accepted at the 2020 ACM on Human Computer Interaction discovered that explanations could create a false sense of security and over-trust in AI … One of the robots explained the ‘why’ behind its actions in plain English, providing a rationale. Another robot stated its actions without justification (e.g., ‘I will move right’), while a third only gave numerical values describing its current state. The researchers found that participants in both groups tended to place ‘unwarranted’ faith in numbers. For example, the AI group participants often ascribed more value to mathematical representations than was justified, while the non-AI group participants believed the numbers signaled intelligence—even if they couldn’t understand the meaning.” —Kyle Wiggers |

Knowledge Shields

In the learning sciences, according to Paul J. Feltovich and Robert R. Hoffman, the term “knowledge shields” refers to arguments and rationalizations students make that enable them to preserve overly simplified understandings of complex phenomena. (For example, students may have an overly simplified understanding of how white blood cells work, which prevents them from learning more about the complex cell interactions in human immune systems.) An educator’s role is to help students recognize knowledge shields and provide instructional support to overcome them. Similarly, ML model end users may have knowledge shields preventing them from understanding and learning from ML models representing complex phenomena.

UX Plus XAI

| “Consciously or not, deliberately or inadvertently, societies choose structures for technologies that influence how people are going to work, communicate, travel, consume, and so forth over a very long time.” —Langdon Winner |

XAI provides opportunities to improve ML application experiences. With XAI techniques, we can present consumers with more windows into complex ML systems’ behaviors and predictions. But XAI also presents a new set of challenges. Product teams need to design ML explanations to meet different users’ needs in different contexts. ML application teams must design explanations such that consumers walk away with an accurate understanding of the system.

UX professionals should assess the following in any explanation design:

- Understandability: Does the XAI method provide explanations in human-readable terms with sufficient detail to be understandable to the intended end users?

- Satisfaction: Does the XAI method provide explanations such that end users feel that they understand the AI system and are satisfied?

- Utility: Does the XAI method provide explanations such that end users can make decisions and take further action on the prediction?

- Trustworthiness: After interacting with the explanation, do end users trust the AI model prediction to an appropriate degree?

As for when UX should get involved in the design of ML applications and their explanations, the answer is right from the beginning. Langdon Winner said in 1980, “By far the greatest latitude of choice exists the very first time a particular instrument, system, or technique is introduced.” We can only ensure that ML benefits end users if we investigate, care, and design for their experience right from the start.