As a UX designer with more than a decade of experience working in the field, I’ve seen the profession evolve dramatically. I remember when the primary UX battles were fought over button placement, information architecture, and creating user flows that were as predictable and seamless as possible.

Meeting the standard meant designing clear paths from A to B.

Enter Artificial Intelligence. Today we find ourselves designing for systems that chart their own paths—from A to somewhere near B, C, or even Z—all based on a logic we can’t always see.

This is the new frontier of AI design, which demands a profound shift in our role. Our challenge is no longer, “Is it usable?” but rather, “Is it responsible?”

Figure 1: Peeling back the layers (generated with Google Nano Banana™ and Gemini).

From Interface to Influence: Designing for Trust in the Black Box

The main UX problem with most AI is its opacity. AI requires users to trust a black box that makes decisions affecting their lives, from the news they read to the opportunities they see. How do we, as designers, build that bridge of trust?

Figure 2: The AI black box (generated with Google Nano Banana and Gemini).

It starts with designers championing transparency.

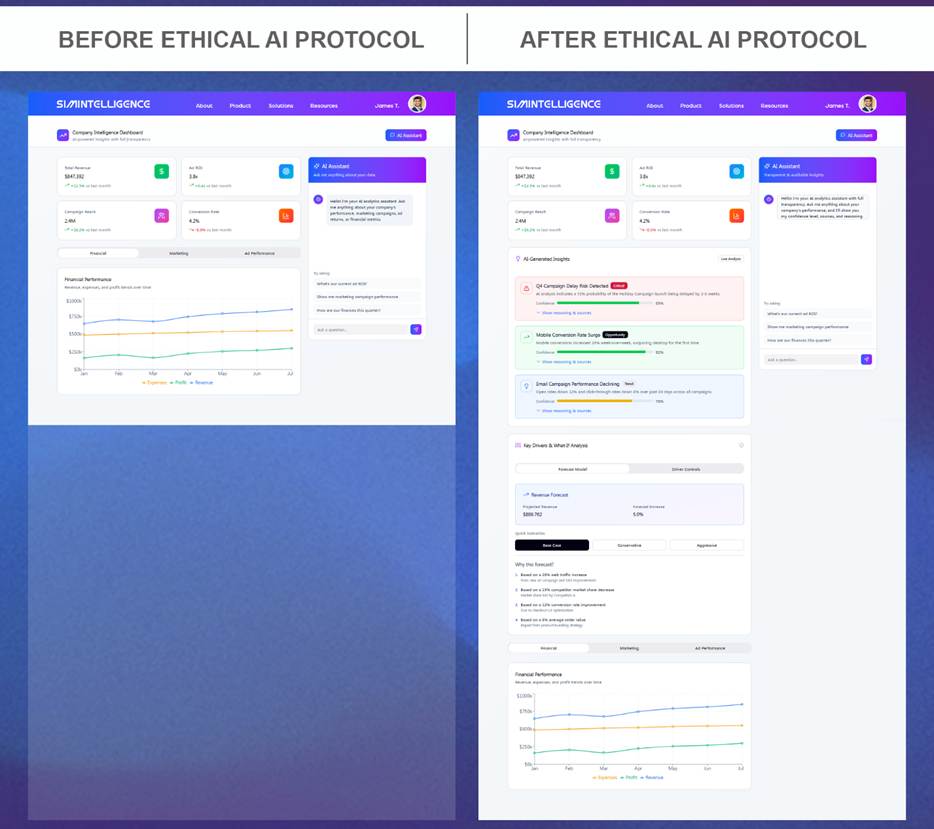

In a past role, I was part of a team that developed an AI-powered software product whose primary source of information was the company’s deep well of tacit, intangible knowledge. Our goal was to synthesize vast amounts of business intelligence into actionable insights for executives. Early on, we hit a classic AI-UX roadblock: Users didn’t trust the AI’s recommendations. The AI was brilliant, but its outputs felt like magical proclamations. Users would ask, “Why should I trust this? How did it get here?”

Our solution wasn’t a better algorithm; it was a better UX. We implemented features that revealed the internal workings, however slightly. We designed key driver modules that visualized the top three data points influencing a recommendation. We added sliders that allowed users to adjust variables to see the AI’s forecast change in real time. We didn’t show them the raw code, but we gave them a sense of control and a narrative for the data. We learned that, for AI, good UX isn’t just about ease of use; it’s about building a foundation of explainability and trust.
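To make the key driver and slider ideas concrete, here is a minimal sketch, not the system described above. It assumes a simple linear forecast so that each input's contribution can be read off directly. The variable names, weights, and baseline are all invented for illustration, and a real model would likely need a dedicated attribution method.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Driver:
    name: str
    contribution: float  # signed effect on the forecast, in forecast units


# Hypothetical linear model: these weights and the baseline are invented.
WEIGHTS = {
    "pipeline_value": 0.40,
    "churn_rate": -2.50,
    "marketing_spend": 0.15,
    "support_backlog": -0.05,
}
BASELINE = 100.0


def forecast(inputs: dict[str, float]) -> float:
    """Return the baseline plus the weighted sum of the inputs."""
    return BASELINE + sum(WEIGHTS[name] * value for name, value in inputs.items())


def key_drivers(inputs: dict[str, float], top_n: int = 3) -> list[Driver]:
    """Return the top_n inputs ranked by absolute contribution to the forecast."""
    drivers = [Driver(name, WEIGHTS[name] * value) for name, value in inputs.items()]
    return sorted(drivers, key=lambda d: abs(d.contribution), reverse=True)[:top_n]


def what_if(inputs: dict[str, float], name: str, new_value: float) -> float:
    """Re-run the forecast with one variable changed, as a slider would."""
    return forecast({**inputs, name: new_value})
```

A key driver module would render the result of `key_drivers(...)` next to the recommendation, and each slider movement would call `what_if(...)` and redraw the forecast. The point of the design is that the user sees which inputs matter and can test them, without ever seeing the model's internals.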

The Ethical Crucible: Our Responsibility as UX Professionals

As we design these influential systems, we inevitably find ourselves standing in an ethical crucible. An AI is only as good—and as fair—as the data it’s trained on and the interface through which it interacts with people. Therefore, UX professionals must act as the system’s conscience.

Ethical challenges are not abstract; they are concrete design problems:

- Bias as a user experience: When a hiring algorithm trained on biased data consistently deprioritizes female candidates, for instance, the bias manifests as a discriminatory user experience. Our job is to design dashboards and review processes that flag potential biases for human reviewers.

- Privacy as a feature: In an era of massive data collection for AI training, the UX of consent is paramount. We must design clear, honest, and user-centric controls that treat privacy as a core feature, not a legal footnote buried in the settings menu.

- Manipulation by design: AI’s ability to hyper-personalize can be used to amplify manipulative dark patterns. We have a responsibility to advocate against creating feedback loops that exploit psychological vulnerabilities for the sake of engagement metrics.
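As an illustration of the first point, the check behind a bias-flagging dashboard can be quite small. The sketch below is an assumption on my part, not a method from any specific product. It compares selection rates across groups and flags any group whose rate falls below four-fifths of the highest rate, a common rule of thumb for adverse impact drawn from U.S. employment selection guidelines. The function names and data shape are hypothetical, and a flag is a prompt for human review, not a verdict.

```python
from collections import Counter

# Rule-of-thumb threshold; an assumption here, and adjustable per context.
FOUR_FIFTHS = 0.8


def selection_rates(decisions):
    """Compute the share of candidates advanced per group.

    decisions: iterable of (group, advanced) pairs, where advanced is a bool.
    """
    totals, selected = Counter(), Counter()
    for group, advanced in decisions:
        totals[group] += 1
        if advanced:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def flag_for_review(decisions, threshold=FOUR_FIFTHS):
    """Return {group: impact_ratio} for groups below the threshold.

    The impact ratio is a group's selection rate divided by the highest
    group's selection rate. An empty dict means nothing was flagged.
    """
    rates = selection_rates(decisions)
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {}  # nobody was advanced, so there is no ratio to compare
    return {
        group: rate / best
        for group, rate in rates.items()
        if rate / best < threshold
    }
```

In a dashboard, each flagged group would surface as a visible alert with its ratio and a link to the underlying decisions, so a reviewer can investigate before the pattern hardens into practice.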

I believe designers must move beyond simply avoiding negative outcomes and start designing proactively for positive ones. We must build deliberate openings into the design process, using UX and AI together to design for equity, so that the system’s primary goal is to create opportunity, not just to optimize a business metric.

Beyond Seamlessness: Designing for Critical Thinking

For years, the gold standard of UX has been a seamless experience. We strive to remove friction so users can achieve their goals with minimal cognitive load. But what happens when the most seamless path narrows a user’s perspective?

AI-powered feeds can create comfortable echo chambers, shielding us from dissenting views and challenging information. In this context, a little positive friction can be a good thing. As UX professionals, we can design for discernment. Table 1 shows some practical examples.

Table 1: UX Patterns for Fostering Discernment

| Pattern | Description | Example |
| --- | --- | --- |
| Source Highlighting | Visually distinguish between different types of content or sources. | An AI news aggregator could use clear labels or color-coding to differentiate opinion pieces from reporting. |
| Perspective Surfacing | Intentionally introduce users to credible, alternative viewpoints on a topic. | Below an article, a module could include, “For a different perspective, see this analysis from [Another Source].” |
| Control and Discovery | Provide users with explicit controls to adjust their bubble and discover new content. | A button named Broaden My Horizons could temporarily reset personalization to show more diverse content. |
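The Control and Discovery pattern can be sketched as a single ranking parameter that the interface exposes to the user. This is a hypothetical illustration, not how any particular feed works: the `affinity` and `quality` scores, the `Item` type, and the blending formula are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    affinity: float  # hypothetical 0..1 match with the user's past behavior
    quality: float   # hypothetical 0..1 editorial or credibility score


def rank_feed(items, personalization=1.0):
    """Order items by a blend of personal affinity and general quality.

    personalization=1.0 ranks purely by affinity (the usual bubble);
    personalization=0.0 ignores the user's history entirely.
    """
    if not 0.0 <= personalization <= 1.0:
        raise ValueError("personalization must be between 0 and 1")

    def score(item):
        return personalization * item.affinity + (1 - personalization) * item.quality

    return sorted(items, key=score, reverse=True)
```

A Broaden My Horizons button would call `rank_feed(items, personalization=0.0)` for the current session and then restore the user's usual setting. Exposing the same value as a slider gives users a finer-grained control over their own bubble.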

Our goal shouldn’t be to create a frictionless slide into comfortable conformity, but to empower users with the tools to be active, critical participants in their own information consumption.

Figure 3: Example of transparent UX-AI.

We Are the Architects of Tomorrow

The systems we are building today are shaping the world of tomorrow. Our role as UX professionals has expanded beyond the screen. We are the architects of AI-driven experiences that have real-world consequences. We are the essential humanists in the room, uniquely positioned to advocate for users and society.

So I urge you, my colleagues in the UXPA and beyond, to embrace this responsibility.

Let’s educate ourselves on the fundamentals of AI. Let’s demand a seat at the table from the very beginning of the product lifecycle. Let’s champion design that is smart and usable, and also transparent, ethical, and empowering. The future is being coded as we speak, and it is our collective duty to ensure it is designed with humanity at its very core.

Lucas Peixoto is an AI-UX System Architect. Throughout his career, he has applied his architectural and strategic vision to innovate in the design and management of user experience systems that integrate design with Artificial Intelligence. With a specialty in translating complex IT and business requirements into scalable structures and predictive (AI-driven) experiences, his designs are both intuitive and high-impact.
