Once a cloud product has launched, product teams often turn to site analytics to measure its success or to identify potential problems. But analytics data alone isn’t always enough to explain why users responded in a particular way. To find the “why behind the what,” teams need qualitative data that shows whether customers are satisfied and what, if anything, is reducing their satisfaction.

Common Sources of Customer Feedback

There is a fairly short list of ways product owners gather qualitative customer feedback when they’re ramping up a new product or feature. These include:

- Customer calls – Frequently a product manager will email or call customers directly to ask for feedback.

- Social media mentions – Platforms and forums such as Twitter, Quora, or Stack Exchange can be great sources of feedback.

- Ad hoc surveys and focus groups – These are isolated efforts, whether a one-time online SurveyMonkey survey or a meeting with several customers at once.

- In-product feedback surveys – Inviting users to take a survey through an intercept or other call to action from within the actual context of use of the product.

Requirements for Customer Feedback Systems

During my career I have had to work with customer feedback from all of these sources, and along the way I’ve developed a set of requirements for customer feedback systems to ensure success. Whether building a process for collecting feedback in-house from scratch, or buying a system from a vendor, the system will be most valuable if it has the following six characteristics:

- Validity – The end goal is to deliver unbiased, trustworthy, and actionable information to the people who need it in the organization.

- Deliverability – Team members can easily access the data on demand, or it can at least be shared automatically with team members. It can’t just wind up buried in someone’s email account or on a system that only a few people have permission to access.

- Retainability – The knowledge won’t disappear or get overwritten—it’s retained by the company so it can monitor progress over time.

- Usability – Collecting feedback about a user experience shouldn’t itself degrade that experience. If the system uses an intercept tool, it has to let teams customize the call to action for giving feedback and avoid calls to action that interrupt and annoy users who are trying to get work done.

- Scalability – The system must be easy to implement each time a new service or feature is added to the site or application. Implementing the feedback system must not require significant development resources, and analyzing the data should be relatively easy once the system is in place.

- Contextuality – As discussed by D. Schacter in the book The Seven Sins of Memory, recall deteriorates over time, so data gathered within the actual context of use is more reliable than data obtained some time after an experience. To limit memory errors, the affordance for giving feedback ideally should be accessible from within the actual experience, enabling the collection of data based on what’s in front of the user rather than based on what they can remember when they’re not looking at the system.

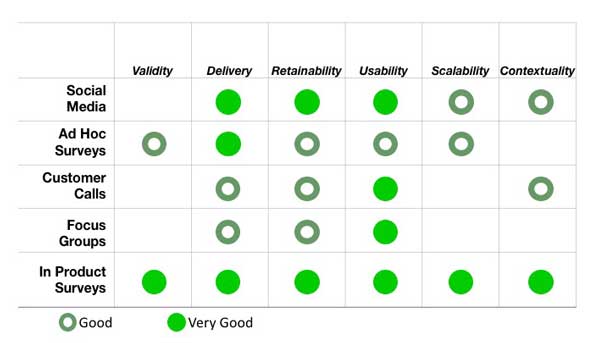

In Figure 1, we can look at the various approaches for obtaining user feedback against this list of requirements and see how they stack up.

- Social media feedback is easy to share and keep around if it’s recorded. Because it’s separate from the user interface, collecting it probably won’t have a negative impact on the user experience. A tweet or forum entry may be written close to the context of use, but the validity of the data is questionable. Since these forums are open to everyone, not just customers, the opinions aired aren’t necessarily valid; they may come from people who haven’t even used the product.

- Ad hoc surveys can be scalable (if the instrumentation is reused); they are often more efficient than eliciting feedback through customer calls, and it’s fairly easy to share survey results with internal teams. If users aren’t being solicited via a popup in the interface, these surveys don’t negatively affect the user experience (although customers may get annoyed if they receive too many requests to participate in ad hoc research studies). But emailed surveys usually aren’t completed in the context of use, and since not all product owners are skilled at writing good survey questions or facilitating focus groups, these approaches may not produce the best quality data. Finally, the ad hoc nature of these surveys can make it difficult to aggregate and monitor feedback over time.

- Customer calls are a great approach for building personal relationships with customers, but they are expensive in terms of the product manager’s time; once the product release scales up, it can be difficult and time-consuming to aggregate and share information from customer calls around the organization. For enterprise systems, product owners tend to spend more time calling decision makers than end users. And calls are subject to a variety of social pressures that tend to elicit biased responses. While it’s possible to solicit interviews in the actual context of use with a tool like Ethnio, most of the time I’ve seen product owners schedule customer calls via email, so this approach usually preserves the user experience at the expense of gathering feedback in the context of use.

- Focus groups lend themselves to written reports and recordings that can be retained and shared internally, and these efforts won’t interfere with the site’s usability. But focus groups are conducted outside the actual context of use and are usually based on small samples. Without a strong, unbiased facilitator, feedback tends to skew toward the opinions of the most vocal or attractive person in the room, so stakeholders may challenge the validity of this approach.

- Product feedback surveys hosted within the product interface are the most likely to meet the requirements of Validity, Deliverability, Retainability, Usability, Scalability, and Contextuality for web and mobile products. They are delivered within the context of use, and reports can be retained, shared, and delivered easily to stakeholders. The call to action can be designed to be available without distracting from the main goal of a feature, and depending on the technology used for deployment, surveys can be easily customized to apply to a variety of product features.

The Customer Feedback Cycle

To get data from within the product context, some companies build their own systems for collecting feedback in the context of use, and others implement some form of on-site feedback surveys on their websites or cloud applications. There are dozens of companies offering a variety of tools for collecting qualitative feedback from site users. But implementing a feedback survey is only the first step to discovering actionable insights. If not handled well, collecting customer feedback can have detrimental effects on the overall customer experience. By itself, data collection isn’t enough; it’s what gets done with it that matters.

For example, when users need help with a product, they don’t always try to find the relevant department in the organization. Instead they ask for help in whatever channel is most expedient. Often that’s a social media channel or the feedback link they see when they’re logged in. If a customer submits a request for help via a feedback channel and no response is made, that experience can have a damaging impact on that customer’s overall satisfaction.

To avoid these bottlenecks, organizations need to adopt processes for managing and integrating feedback from multiple channels, including survey responses, as well as social media mentions, call center feedback, and other sources.

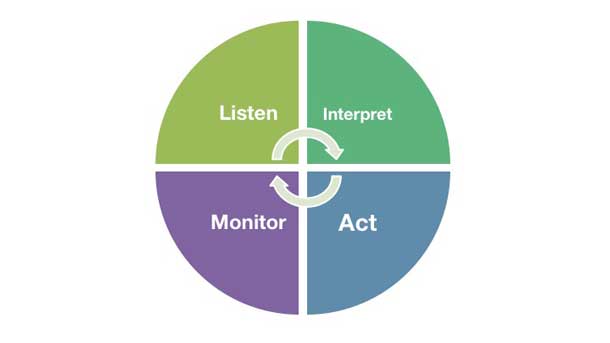

Bruce Temkin of the Temkin Group has written extensively about how customer feedback gathering is really a four-stage cycle (see Figure 2). According to Temkin, listening to your customers by collecting user feedback is only the first step. In step two, teams interpret the data to find meaningful insights in it. Step three requires a response: some action taken based on those insights. The fourth step is to continue monitoring feedback, tracking it over time to discover whether those actions had a positive impact on the business.

“By itself, there’s no return on the investment for collecting customer feedback. Value comes from deriving useful insights and closing the loop with the customer.” It’s the third and fourth steps that drive value and allow that value to be measured.

Responding to feedback includes responding to users directly or taking action based on their feedback. While this may be as simple as fixing a reported bug, insights from feedback may also influence the design of new features, demonstrate a need for new customer documentation, or inspire other improvements to the user experience. These insights may even identify new business strategies.

And closing the loop with the customer builds value; letting people know they’ve been heard helps companies develop deeper relationships with their customers. Sometimes a simple response letting a customer know their concerns have been heard can turn detractors into staunch advocates.

How Customer Feedback Supports Business Goals

There are three things that software-as-a-service (SaaS) companies want to do to make money:

- Acquire new customers by communicating the value proposition to prospects.

- Retain existing customers, often by resolving account issues or bugs, delivering a quality experience, and building relationships.

- Upsell existing customers by deepening those customer relationships and by designing and delivering product enhancements that customers are willing to pay more to use.

The product feedback that is captured online is usually about one of four things:

- Billing or account problems – Responding to these problems is critical to retaining existing customers. If possible, these can be automatically forwarded to a support queue. If that’s not technically feasible, including language in the feedback survey that directs feedback respondents to the correct place for contacting support will help keep account inquiries from languishing in a queue somewhere waiting for a response.

- Problems using the system – These also need to be resolved quickly to retain customers. Sometimes this is a matter of improving user education in the product itself or in support documentation, but these issues may also represent upsell opportunities if specific solutions are available to solve a particular problem. So these issues should be shared with the documentation and product teams, as well as customer support, and must be triaged to determine if they should be shared with a sales team as a potential lead.

- Suggestions for improving the product – Usually these offer insights into potential new features or ways to solve problems for new target markets. However, these suggestions must be triaged to determine whether each one is genuinely new or an opportunity for the customer support team to educate the user (occasionally a user will suggest a feature that already exists in the product). In either case, these ideas must be shared with the product team so they can inspire improvements to the discoverability of existing features or the development of new features.

- Compliments or other positive remarks – Positive feedback does more than make product teams feel good about what they’ve shipped. It also helps companies communicate their value propositions more effectively so they can acquire new customers. Finding out what customers value in the product can help marketing teams identify customer advocates they can call on for successful case studies. Positive feedback also sheds light on which aspects of the product resonate most with customers, which may inspire new marketing messages. Any compliments emerging from customer feedback surveys should be brought to the attention of the marketing team as well as the product team.

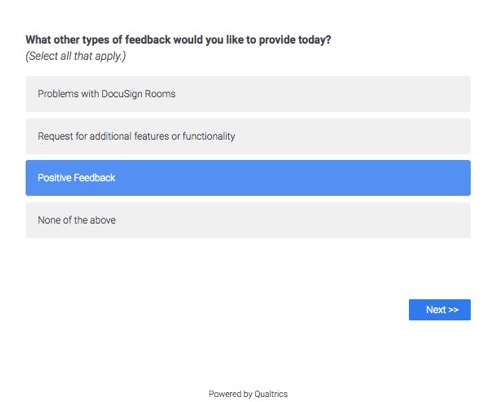

These business goals and the four feedback use cases help inform the design of more efficient systems for managing and responding to user feedback (see Figure 3). Putting all responses into a single bucket that has to wait for an analyst to triage them is not the most effective design for a feedback system. Designing feedback surveys so they categorize user comments by use case lets teams build systems and processes that act on feedback efficiently.

While human intervention—reading and categorizing feedback—will still be necessary in some cases, asking users to help categorize the type of feedback they wish to provide helps speed responses by removing the need for a human to triage every piece of feedback that comes in before getting it to the right team for a response.

Once feedback is sorted into separate categories, it can be delivered directly to the right stakeholders and even channeled into a company’s CRM, where everyone who needs the customer responses can access them. Not only does this approach speed up response time and improve the experience for customers sending feedback, it also improves the overall utility of the feedback.
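To make the routing idea concrete, here is a minimal sketch in Python, assuming the survey asks respondents to pick one of the four use cases described above. The category values, the team names (`support`, `documentation`, `product`, `sales_triage`, `marketing`, `analyst_triage`), and the `Feedback`, `route`, and `dispatch` names are all hypothetical; a real system would push items into a CRM or ticketing queue rather than an in-memory dictionary.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    """The four feedback use cases, as chosen by the respondent (hypothetical values)."""
    BILLING_OR_ACCOUNT = "billing_or_account"
    USAGE_PROBLEM = "usage_problem"
    SUGGESTION = "suggestion"
    COMPLIMENT = "compliment"


# Hypothetical team names, mapped to the stakeholders each use case should reach.
ROUTES: dict[Category, tuple[str, ...]] = {
    Category.BILLING_OR_ACCOUNT: ("support",),
    Category.USAGE_PROBLEM: ("support", "documentation", "product", "sales_triage"),
    Category.SUGGESTION: ("product", "support"),
    Category.COMPLIMENT: ("marketing", "product"),
}

# Uncategorized or unrecognized feedback still needs a human to triage it.
FALLBACK: tuple[str, ...] = ("analyst_triage",)


@dataclass
class Feedback:
    customer_id: str
    text: str
    category: str | None  # raw value from the survey form; may be missing


def route(item: Feedback) -> tuple[str, ...]:
    """Return the teams that should receive this feedback item."""
    try:
        category = Category(item.category)  # raises ValueError for None or unknown values
    except ValueError:
        return FALLBACK
    return ROUTES[category]


def dispatch(items: list[Feedback]) -> dict[str, list[Feedback]]:
    """Group a batch of feedback into one queue per receiving team."""
    queues: dict[str, list[Feedback]] = defaultdict(list)
    for item in items:
        for team in route(item):
            queues[team].append(item)
    return dict(queues)


if __name__ == "__main__":
    batch = [
        Feedback("c1", "I was charged twice this month", "billing_or_account"),
        Feedback("c2", "Love the new dashboard", "compliment"),
        Feedback("c3", "Where did the export button go?", None),
    ]
    for team, queue in dispatch(batch).items():
        print(team, [f.customer_id for f in queue])
```

The design choice worth noting is the fallback queue: letting respondents self-categorize removes most of the manual triage, but anything they leave uncategorized still lands in front of a person instead of being dropped.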

Ultimately, to design a customer feedback system that works, it’s not enough to only think about the user interface for collecting feedback. It’s necessary to understand the goals of the user and the goals of the business to design an effective platform for delivering customer feedback.