Virtual assistants are still in their infancy, but UX designers in the healthcare sector are investigating voice-driven technologies to improve the user experience of electronic health record (EHR) systems.

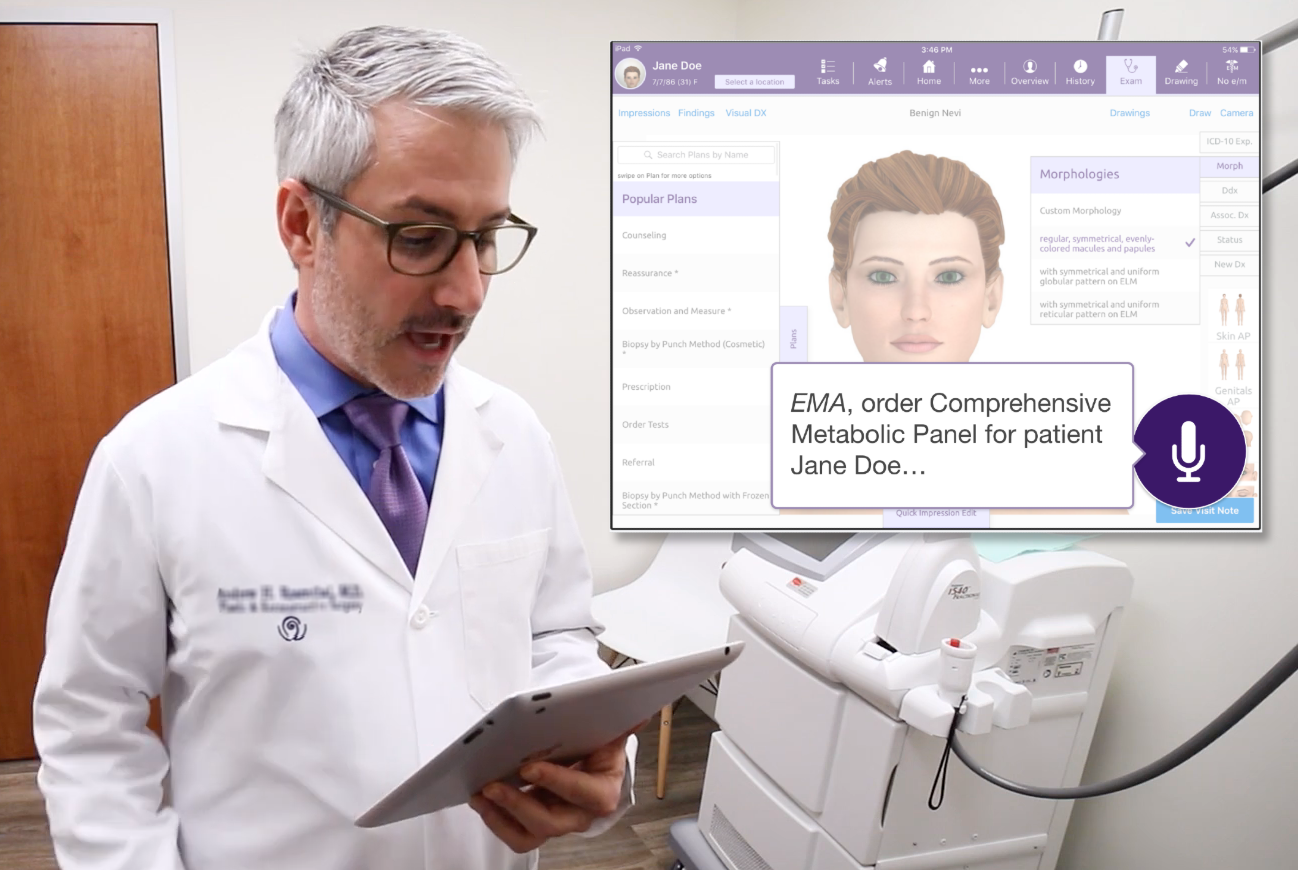

Figure 1. Physician interacting with a prototype for a virtual medical assistant.

Physician Burnout Is (In Part) Caused by Medical Software

Physician burnout is a pressing challenge in the healthcare industry. Because of a combination of government regulations and the information needed for insurance reimbursement, medical staff have many hoops to jump through. These requirements add to already complex and extensive workflows within an electronic health record (EHR) system. A study conducted by the American Medical Association (AMA) identified cumbersome EHR systems as the leading cause of physician dissatisfaction. It also found that physicians spent two hours in an EHR system for every hour spent with a patient, with the work often spilling over past normal working hours. During a visit, half of the physician's time is spent facing a screen instead of the patient.

In the article, “Taking a User-Centered Approach to Solving Physician Burnout,” I discussed what we learned from representatives of major medical organizations about issues that contribute to physician burnout and what the healthcare UX community can do to help. Several themes emerged from this research:

- Improve system workflows: match EHR workflows to how clinicians practice medicine.

- Reduce data entry burden: look for opportunities to streamline documentation.

- Decrease information overload: identify ways to better present information and reduce alert fatigue.

The healthcare UX community still has work to do to improve the user experience of medical software. The complex nature of medical documentation, combined with numerous legacy systems, is making this a slow process. As a result, physicians are seeking alternative technologies to solve their problems.

Voice Assistants Are Gaining in Popularity

Voice assistant adoption has been increasing in the general population. According to the Pew Research Center, in 2017, nearly half of Americans reported using voice assistants, primarily on their smartphones. This number is likely to keep growing as voice capability is built into the technologies we use in our daily lives.

Physicians have been using dictation software such as Dragon by Nuance and Fluency Direct by M*Modal (now a part of 3M) for years. These tools allow the user to transcribe a medical note word for word, but do not provide any task-related functionality such as electronically prescribing a medication. Doctors are seeking new ways to optimize their productivity while not sacrificing interaction with their patients.

- According to DRG Research, 23% of U.S. physicians already use voice assistants at the office (mainly Google Assistant and Siri) for both work-related and personal tasks.

- Of 10,000 U.S. clinicians polled by Nuance in 2017, 80% believed that virtual assistants would drastically change healthcare.

DRG Research also found that physicians liked voice assistants for their time-saving benefits and hands-free capabilities. Voice interaction allows physicians to use their devices during a sterile procedure without taking off their gloves. Given the potential for a virtual assistant to be the doctor’s “right arm,” we wanted to better understand the specific use cases and the opportunities to make voice technology commonplace in the exam room.

Our Experience Designing a Virtual Medical Assistant (VMA)

As we looked ahead to the future of healthcare, we realized that traditional ways of interacting with medical systems via keyboard, mouse, and touchscreen would not solve the problem of physician burnout. Our UX team has been investigating voice-driven user interfaces as a way to supplement, and possibly someday replace, traditional interfaces. The goal for a VMA is to understand what the doctor wants to do and carry out the task without the user having to walk the system through it step by step.

Conducting User Research to Better Understand User Needs and Opportunities for a VMA

Our UX team conducted user interviews and contextual inquiries to better understand how a voice interface could fit into a physician’s workflow and to identify opportunities for physicians to benefit from this technology. We visited several medical practices to observe how staff interacted with our EHR. This helped us understand situations in which a VMA could save users time by automating tasks that would normally take several steps to perform in our system. We also witnessed the dynamic nature of a practice environment and realized that our VMA would need the flexibility to perform relatively short tasks (e.g., refilling a prescription) as well as understand the context of what the doctor was doing (e.g., the specific patient, visit, or exam room number). The VMA would have to be intelligent enough to know when to ask for clarification and, ultimately, to follow a conversation.

Figure 2. A medical assistant using an EHR system to document a patient’s exam.

Some of the voice-driven tasks our team identified that could save physicians time included the following:

- using a smartphone to ask the VMA about the physician’s upcoming schedule and for information about their next patient

- instructing a VMA while in the exam room to pull up imaging to show to the patient

- having a VMA perform tasks such as prescribing a medication, ordering labs, assigning tasks to staff, or setting a reminder

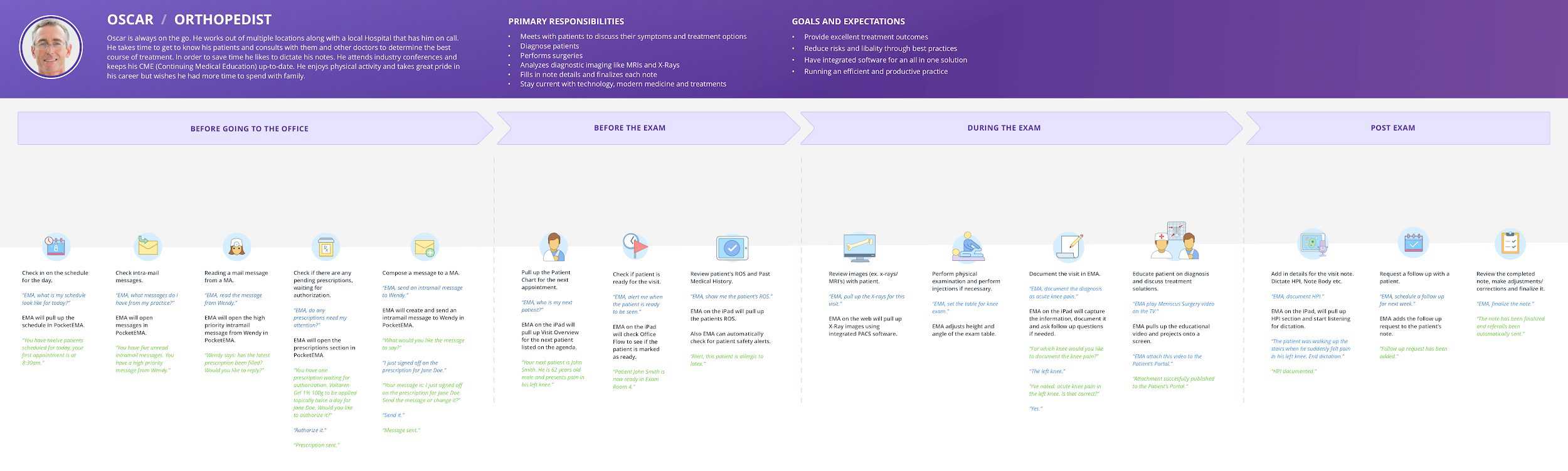

Our journey map (Figure 3) shows the interactions that take place in the context of a typical patient visit. Each segment of the journey highlights what the doctor needs to accomplish, what they say to the VMA, and how the VMA responds to the command.

Our EHR is designed to work across multiple platforms, so we wanted our VMA to work on laptops, tablets, smartphones, and smartwatches. We also introduced a smart speaker into the exam room for situations in which the user is not directly in front of a computer and wants to perform tasks using voice alone. The smart speaker could also control and interact with other devices in the room, such as a smart TV.

Figure 3. A physician journey map for interacting with a voice user interface.

Designing a Voice-Driven User Experience

Once our team had an understanding of what our users wanted to accomplish with a VMA and how they expected to use it, we created a series of task flow diagrams and sketches. To see if we were on the right track, we validated the approach with our team of in-house doctors and medical staff.

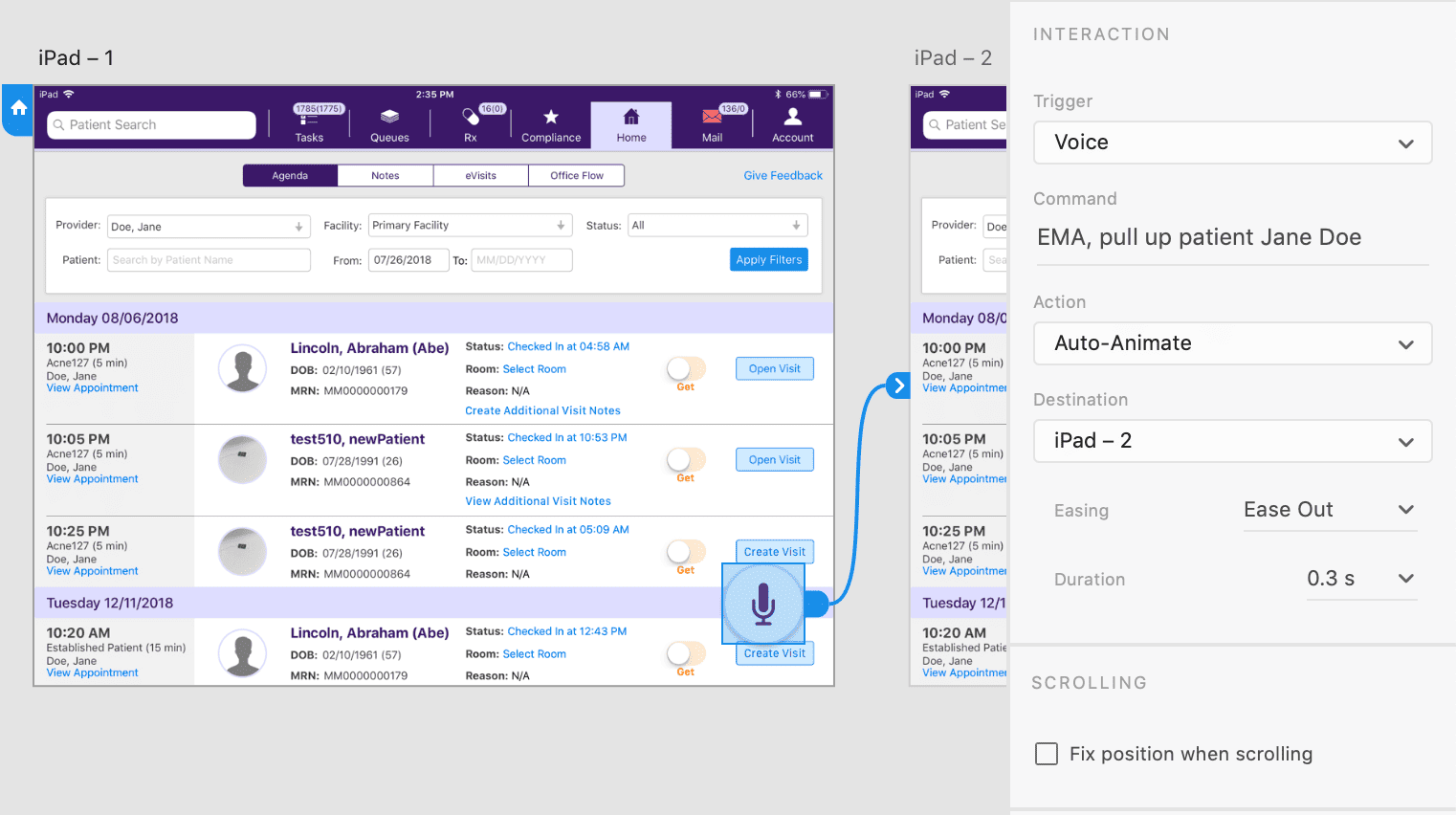

Our prototype used Adobe XD’s new speech recognition and voice capabilities to let users interact with our VMA by voice. Configuring the prototype for voice interaction in Adobe XD was surprisingly easy; however, a major limitation of the tool is that the user must hold down the spacebar (on a computer) or press and hold on the screen (on a mobile device) to initiate a voice command. This is presumably to prevent commands from being triggered accidentally in a prototype environment. We envision our VMA listening actively in the background, without the user needing to press anything to initiate a voice command.

Figure 4. Setting up voice triggers and commands using Adobe XD.

Next, we plan to get feedback from our users by conducting usability testing with our clients at our upcoming users’ conference to see how the prototype performs. Our goals for this research are to determine the following:

- Will our VMA seamlessly fit into a medical professional’s existing workflows?

- How easy or difficult is it to use voice commands to complete common medical tasks?

- Will our VMA improve the efficiency of accomplishing tasks that currently require a series of steps within our EHR?

We are very curious to see how people will react to using our EHR with their voice. The outcome of this research will help us iterate further to make our VMA easier to use and to increase the efficiency of common tasks. The user feedback will also help us plan future product roadmaps that include new voice-driven functionality.

Figure 5. Video shoot for our Virtual Medical Assistant concept.

To help our stakeholders imagine what the VMA experience would be like in a medical practice, we created a proof-of-concept video. The video, set a few years in the future, shows a doctor performing routine tasks with a VMA in different settings within a practice, including an exam room and their private office. We found that seeing a person act out these scenarios built excitement for this future technology and helped our internal teams better understand how doctors would use it.

Considerations for Designing a Voice-Driven User Experience in Healthcare

Designing a voice-driven experience in the healthcare industry presents additional challenges for UX designers, including the following:

- Patient privacy: In the U.S., HIPAA strictly protects patient information, and VMAs need to be developed with this in mind. For example, both what the user says to the VMA and what the VMA says out loud in response may contain patient-identifiable information.

- Patient safety: The system needs safeguards to prevent accidental actions that could harm a patient (e.g., prescribing the wrong dosage of a medication). When there is a potential risk to patient safety, the VMA should always confirm the command with the user.

- Learnability: The VMA user experience should match the user’s mental model, and the system should perform in a predictable way. Users should not have to learn the VMA’s vocabulary; instead, the system should understand natural language.

- Context: Medical environments are dynamic and noisy, so the system must adapt to the environment in which it will be used. The VMA should correctly identify when the primary user is speaking and distinguish that user’s voice from other voices.

These challenges should be considered early and often throughout the product development cycle as they have significant design, development, and legal implications.

Next Steps for VMAs and Reducing Physician Burnout

Based on what I have seen in the industry, I believe that virtual medical assistants have the potential to operate as an ambient presence in a medical facility. As VMAs mature, they will be able to handle more complex tasks that are currently the most time-intensive and disruptive to a doctor’s workflow. Voice technology will be at the core of the user’s experience with VMAs and will ultimately drive interactions with other clinical and office equipment. Artificial intelligence (AI) will eventually be able to analyze conversations among the patient, doctor, and medical staff to document structured data in a visit note and to provide clinical decision support. This will help doctors focus on providing better patient care while reducing the burden of administrative tasks.