Progressing a user-centric culture within an organization can be a challenge, mainly because it means shifting how people think about and understand the product development process toward the user. I started as the first UX professional within an established mid-sized FinTech company just over 4 years ago, and have helped introduce the organization to user experience methods. Starting with the basics, we have been moving toward a user-centric culture using a variety of methods working together:

- Initially, I created and ran workshops to introduce user research and design basics to product owners/managers who were less knowledgeable on these subjects. The goal was to empower them to use various methods to create more user-centric products.

- Next, I worked with product owners/managers to wireframe some new concepts, again with a more user-centric mindset. I also provided training on how to conduct research and analysis and how to incorporate findings into designs/redesigns/features.

However, there was still a gap: even with all of this training, we were not consistent in user-centric implementation. So, we created a rating system to establish a user-centric rhythm. The rating system, which involves a scorecard and UX coaching, was a way to track and measure user-centric efforts and improvements. Used together with product owners/managers, the scorecard would track teams’ user-centric efforts, and that tracking would help increase user experience maturity.

User Experience Maturity

User experience maturity is the degree to which members of an organization are focused on the user at all stages of the product development process. A good user experience does not happen by accident; it takes careful, thoughtful understanding of the user from the entire team creating the experience. Think of a solid user-centric team, from designer to product owner to engineer, like a jazz band that works well together to create great music or a baseball team that works together to win the World Series. All members are focused on the same goal.

Figure 1. Jazz band GoGo Penguin working together to create great music.

The User Experience Maturity Model

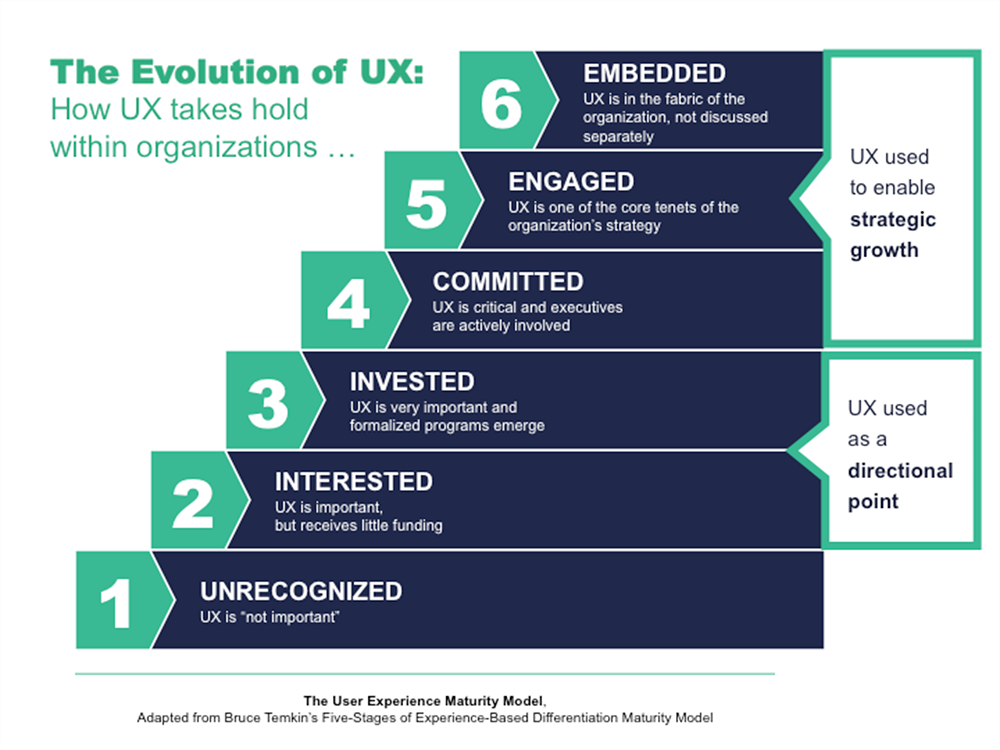

User experience maturity models, which focus on how well organizations work together to achieve the best user experience possible, have been part of UX culture since the 1990s. Maturity models created by G. A. Flanagan (1995), Jonathan Earthy (1998), Jakob Nielsen (2006), Bruce Temkin (2007), and Leah Buley (2015) display various stages in a three-, five-, or even eight-stage process. Each stage shows the level of user experience commitment and thinking within the organization. The models usually start with “1” representing little or no user experience commitment/thinking and move up to the top stage, in which UX is part of the organization’s decision making as a whole or embedded within the culture.

These models prove useful in gauging the user-centric focus of the organization. Each stage of the model represents a move toward making UX part of the fabric of the organization. This model displays how UX can move from minimally executed at the lowest stage up to the highest “embedded” stage, where “UX is in the fabric of the organization, not discussed separately.” Although the models are useful in gauging the user experience maturity of an organization, they offer less direction on how to increase that maturity, for example, how to move the organization up from a 3 to a 4. Few models address how to sustain an organization at a 5 without devolving to a 3 or 4.

Figure 2. One of the many popularized user experience maturity models. (Credit: Brianna Sylver, adapted from Bruce Temkin’s Five Stages of Experience-Based Differentiation Maturity Model)

Why Increase User Experience Maturity?

As the model above and others with a similar purpose show, the goal is to increase and maintain user experience maturity. Increased maturity means a better experience for customers, which often results in increased customer satisfaction and product sales.

“Increasing your organization’s UX maturity enables you to better deliver the combination of functionality, aesthetics, and usability that can lead to increases in customer satisfaction, higher sales due to ease of use, and decreased support calls” (Lorraine Chapman and Scott Plewes, 2018).

Implement a Rating System

With the goal of increasing our company’s user experience maturity, we first determined how to track user-centric efforts within each product. We attempted to answer the question: How do we get product owners/managers, who are busy writing user stories and managing products, more focused on improving the user experience for their own products?

We concluded that our system needed to help product owners measure their user-centric efforts to better their products for their customers. As product owners/managers release features and updates to new and existing products, the responsibility for the user experience ultimately falls on them. We determined that creating a quantified user-centric scorecard could help our organization move up the maturity ladder.

Creating the Scorecard

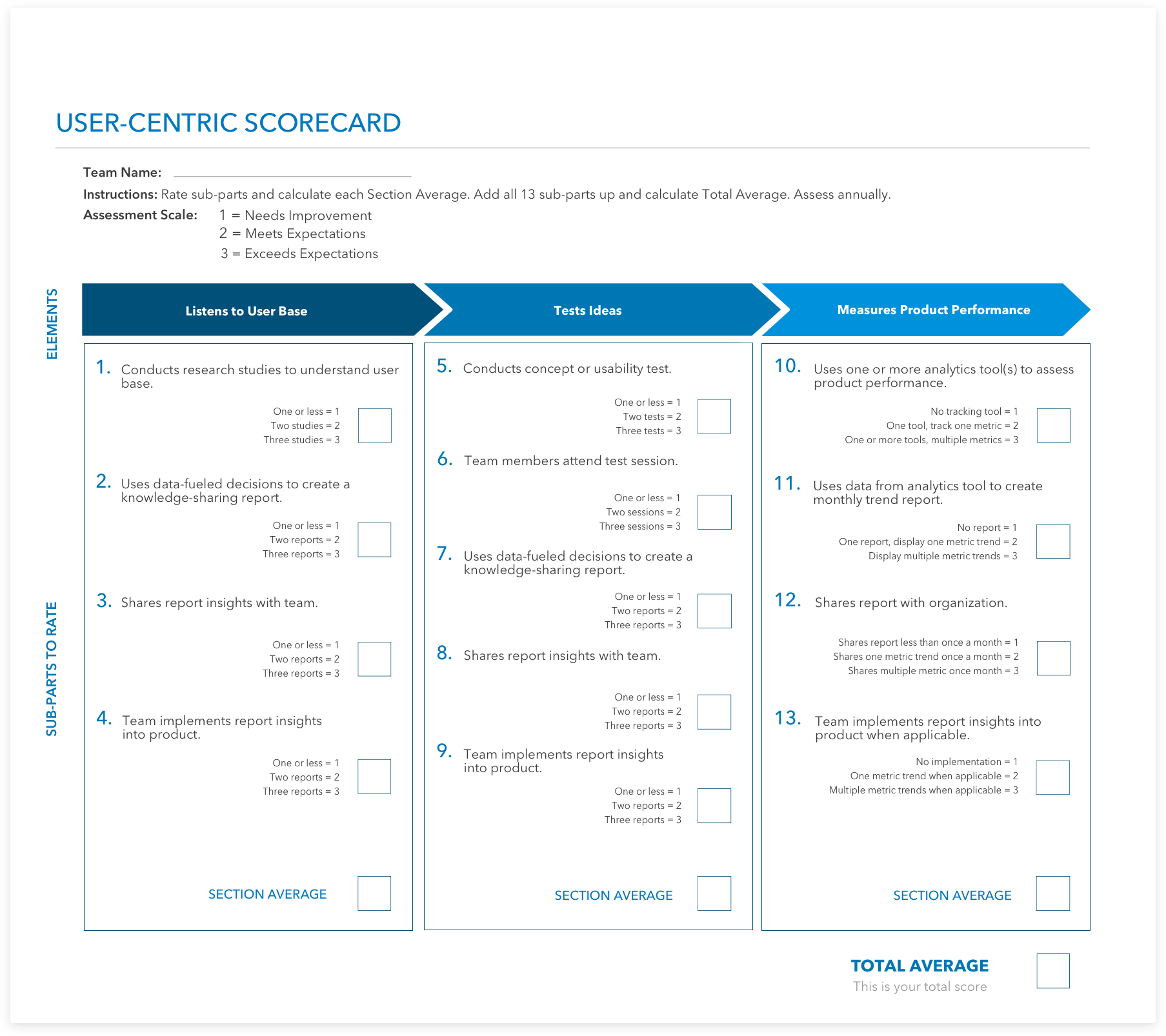

We created the scorecard tracking system, quantifying elements of user-centric efforts in the design process. Reflecting the necessary phases of good product development, we broke it down to the following three elements:

- Listening to and understanding the user: How well do you know your user base?

- Testing new designs and concepts: How much and how often do you test your ideas with users?

- Measuring post-launch value: Is your product providing value to the users?

Next, we created a method of measurement using the 1–3 scale already present within our company’s culture (used for employee assessments): 1 = needs improvement, 2 = meets expectations, and 3 = exceeds expectations.

We decided what to measure by determining the most important elements of the three phases. For example, in measuring how the team is “Listening to and understanding the user,” we assessed how well the product team was doing the following:

- Conducting research and collecting user data, such as customer interviews

- Analyzing the data to create insights

- Sharing insights with all team members, so understanding of the customer is clear

- Implementing the insights into the product

The scorecard focuses on the output of the items above and how they are being conducted. For example, if a team is conducting research and learning from customers, they need to create a report, share it with other team members and stakeholders, and if applicable, implement the insights into the product. Writing a report allows other members of the team, such as software engineers, to be right there with the customer. The closer all team members are to the customer voice, the better the user experience. In the second element, “Testing new designs and concepts,” one item focuses on team members attending concept or usability testing sessions. Again, bringing team members, especially software engineers, closer to the testing experience allows them to get closer to the users and empathize with them.
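As a rough illustration, the 1–3 scale and the “Listening to and understanding the user” items described above could be recorded as follows. This is a minimal sketch, not the actual scorecard: the `ScorecardItem` class, the short item labels, the sample scores, and the choice to summarize an element by averaging its item scores are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean

# Scale labels taken from the 1-3 scale described in the article.
SCALE = {1: "needs improvement", 2: "meets expectations", 3: "exceeds expectations"}


@dataclass
class ScorecardItem:
    """One rated line on the scorecard (hypothetical structure)."""
    element: str  # one of the three scorecard elements
    name: str     # short label for the item, invented for this sketch
    score: int    # 1, 2, or 3

    def __post_init__(self):
        # Reject anything outside the 1-3 scale.
        if self.score not in SCALE:
            raise ValueError(f"score must be 1, 2, or 3, got {self.score}")


def element_averages(items):
    """Average the item scores within each element (averaging is an assumption)."""
    grouped = {}
    for item in items:
        grouped.setdefault(item.element, []).append(item.score)
    return {element: round(mean(scores), 2) for element, scores in grouped.items()}


LISTENING = "Listening to and understanding the user"

# Sample scores are invented for illustration only.
example_items = [
    ScorecardItem(LISTENING, "Conducting research", 2),
    ScorecardItem(LISTENING, "Analyzing data", 2),
    ScorecardItem(LISTENING, "Sharing insights", 1),
    ScorecardItem(LISTENING, "Implementing insights", 3),
]

listening_average = element_averages(example_items)[LISTENING]
```

Keeping each item as its own record, rather than storing only a per-element total, preserves the detail a coach would need to see which specific activity is lagging.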

Overall, the creation of the scorecard was trial and error. The UX team worked closely with various product teams to gain feedback on what was to be measured, how it could be measured, and the best way to assess the three elements. The idea of the scorecard was to quantitatively assess the user-centric efforts of each team so that we could collectively improve. As management guru Peter Drucker states, “What gets measured, gets managed.”

Figure 3. MSTS User-Centric Scorecard after numerous alterations/workshops. It is designed to be scalable so as to work with various-sized organizations.

Implementing the Scorecard System

We worked with product owners to implement the rating system in phases. We first met with the product owner and lead engineer of each team to discuss the system and the goals for the teams and the organization in general. Next, we attended standup meetings to learn the culture of the team, current projects, and whether they used user-centric labels and language. Third, we worked with the product owner and lead engineer to assess and rate the 13 parts of the scorecard.

We first used a period of 6 months to assess the system, but soon discovered that was too short a time for the teams. For example, some teams were building during the majority of the timeframe we assessed, and they had done research and testing prior to the assessment period. So, we opened it up to an annual assessment period and made what was being measured within each element of the scorecard clearly understood. (See scorecard in Figure 3.)

After the first assessment, we determined which element to focus on for improvement. In looking at the three lowest scores, we decided to focus on improving only one of the three so teams would not be overwhelmed. Collectively, we put a plan in place that would work for each team, if a plan was needed. Also, after the assessment, we created a report displaying the scores for each team and shared the scores with leadership to promote awareness and transparency.
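The step of looking at the three lowest scores could be sketched as below. This is only an illustration under stated assumptions: the function name, the item labels, and the sample scores are hypothetical, and the final choice of which single item to focus on is left to the team and the UX coach rather than automated.

```python
def lowest_scoring_items(scores, count=3):
    """Return the `count` lowest-scoring (item, score) pairs, lowest first.

    Ties keep the order in which the items were entered, because
    Python's sort is stable.
    """
    return sorted(scores.items(), key=lambda pair: pair[1])[:count]


# Hypothetical team scores on the 1-3 scale; item names are illustrative.
team_scores = {
    "Conducting research": 2,
    "Analyzing data": 3,
    "Sharing insights": 1,
    "Attending test sessions": 1,
    "Measuring post-launch value": 2,
}

# Candidates for the single improvement focus; the team picks one of these.
candidates = lowest_scoring_items(team_scores)
```

Returning the candidates instead of a single item mirrors the process described above, where one of the three is chosen in conversation so the team is not overwhelmed.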

Since starting the scorecard program, we have been working to make user experience maturity a focus of the organization, which in turn will hopefully result in better user experiences. Our hope is that making the user experience better for customers will ultimately result in increased customer satisfaction, fewer calls to support, and an overall increase in sales.