The science fiction author Neal Stephenson has said that today’s science fiction is fixated on nihilism and apocalyptic scenarios. It’s an interesting perspective, and you can read Annalee Newitz’s article reporting on this view in Smithsonian magazine (http://bit.ly/xypo6q). Stephenson gave us the wonderful “Young Lady’s Illustrated Primer” in his book The Diamond Age (1995). The educational and inspirational device understood its user, tailored its language and delivery accordingly, and helped the lower-class protagonist, Nell, to blossom and become a leader.

Science fiction has suggested many wonderful devices, sometimes elaborating on existing science (for example, the space elevator in Arthur C. Clarke’s 1979 novel The Fountains of Paradise) and sometimes inventing them (Star Trek’s holodeck). Frequently, the technologies suggested are convenient workarounds for pesky, plot-stifling constraints such as gravity or the speed of light.

Despite Stephenson’s concerns, I believe that the technologies presented in dystopian sci-fi can be interesting, thought-provoking, and possibly even salutary. In Aldous Huxley’s Brave New World (1932), citizens are decanted, not born. While the elite Alphas and Betas are individuals, the lower classes are produced in multiples to provide compliant, identical workers. Population is strictly controlled, consumption and consumerism are actively encouraged, and the pursuit of a higher good is managed by provision of the hallucinogenic drug Soma. Technology and social conditioning have been used quite deliberately to create a stable, but ultimately sterile and meaningless society.

While I would not suggest that any proponents of persuasive design would wish to create the “brave new world” Huxley imagines, there are slippery slopes here, and it’s interesting to consider the uses to which our work in this area might be put. Is it ethical for Amazon to use persuasive techniques to encourage customers to purchase more books? I imagine most of us would consider that to be simply good business practice and that it may even be a social good if it encourages people to read more. However, when applied to online gambling, precisely the same techniques take on a different complexion.

Similarly, the techniques described in Thaler and Sunstein’s Nudge: Improving Decisions about Health, Wealth, and Happiness (2008), and the libertarian paternalism it espouses, can provide great benefits, but Huxley’s voice warning of the dystopian extremes may be worth hearing—in particular when its philosophy is so wholeheartedly embraced by governments. In fairness, British Deputy Prime Minister Nick Clegg did say in relation to the government’s Behavioral Insight Team: “The challenge is to find ways to encourage people to act in their own and in society’s long-term interest while respecting individual freedom.”

The technology described in Daniel F. Galouye’s novel Simulacron-3 (1964) is specifically aimed at understanding people’s behavior. The book was also published as Counterfeit World and subsequently made into the entertaining movie The Thirteenth Floor. In the book, a virtual city simulates the real world. The inhabitants are conscious but unaware that they are simulations. They are monitored for their reactions to marketing campaigns, with the findings applied in the real world. An abusive individual from the real world acts out his sadistic fantasies on some of the simulated inhabitants. In the movie, the simulated protagonist at one point attempts to drive out of the city (Los Angeles), but eventually reaches the boundary of the simulation, at which point the city degenerates into wireframe. It also transpires that the real world is in turn a simulation of a higher-order world.

The book alerts us to issues surrounding the potential rights of entities created by us exclusively for our use, but which may acquire, or demand, a status other than the one we grant them. Of course, this is very much in the fore of Asimov’s I, Robot short stories from 1950, and in much of Philip K. Dick’s work. In Orwell’s 1984, as in Huxley’s Brave New World, the client for the technology to control the population was an authoritarian government. The issue of our own moral responsibility as designers can be equally fraught: do we simply design the UX to deliver the product or service that meets our client’s needs, or do we have a greater, or higher, obligation?

There is also the issue of UX designers treating ourselves as favored exceptions. In Roger Zelazny’s 1976 anthology of short stories My Name is Legion, the protagonist has worked on a system designed to enable the government to conduct thorough surveillance of its citizens. By leaving a back door, the protagonist opts out, and lives a life of investigative adventure.

We don’t need a thoroughly dystopian viewpoint to see technology that delivers a poor user experience. A cynic might suggest that much of our current technology does that anyway, but technology in science fiction can provide exemplars of the extremes to which we might inadvertently go. Many of my favorite examples come from American writer Philip K. Dick. In Galactic Pot-Healer (1969), for example, he gave us the “Padre Booth,” a machine that would, upon insertion of a dime, give advice on any question in the religion of one’s choice. Joe Fernwright, who is unemployed, asks the Padre Booth whether he should embark on a possibly illegal and certainly hazardous mission on an alien planet. Dialing Zen, he is told that “not working is the hardest work of all.” The Puritan Ethic tells him that “without work, a man is nothing.” Dialing Allah, Joe is told that “there are enemies, which you must overcome in a jihad.”

In the same book, we also find “The Game,” played by under-employed consultants who run book titles and authors’ names through multiple “translating computers” and challenge their counterparts to figure out the original. Thus, “Serious Constricting-path” is a famous author. Hint: he wrote The Sun Also Rises. Supposedly, “The Game” originated from a Russian engineering paper that contained, in English, the mysterious term “water sheep” which, when traced to the original, meant “hydraulic rams.”

But Dick is more interesting and challenging when he presents technology gone wrong. In Ubik (1969), Joe Chip, who is perennially short of money, is stuck in his apartment when his door refuses to open for him unless he pays it. He informs the door that what he pays is a gratuity. However, his door replies: “I think otherwise. Look in the purchase contract…” Joe reads the contract, to which he has frequent need to refer. “‘You discover I’m right,’ the door says. It sounded smug.” So here we see technology that not only provides a lousy user experience but is smug into the bargain. As the world around him slips into a uniquely Dickian morass, Joe encounters multiple instances of technological failure and confusion. At one point he orders a coffee, but the shop rejects his proffered payment method. In the ensuing argument, it threatens to call the police, tells Joe that “We can do without your kind” and, to add to the insult, serves Joe a coffee with sour, clotted cream. “One of these days,” Joe tells the shop, “people like me will rise up and overthrow you, and the end of tyranny by the homeostatic machine will have arrived.”

One could argue that we hit that degree of inappropriate behavior with the advent of Clippy, Microsoft’s much-hated “assistant” who would make condescending statements such as “It looks as though you’re writing a letter,” or with error messages that begin with “Oops!”

Unless we consciously take control, our devices and applications may have personalities that are ineffective or inappropriate.

The fact that Joe fights back (for example, he begins to dismantle his door with a screwdriver) reminds us that the people for whom we design can indeed rise up. Many people who conduct usability testing can attest to a change of heart over the past decade or so. Where previously it was common to encounter people who blamed themselves for their inability to use a website or application, it is far more common now for the blame to be laid where it belongs: at our own door. We do well to remember that the Joe Chips of this world can always take a screwdriver-equivalent to our work.

I often found Asimov’s robots somewhat bland, but in Dick’s world they are imbued with a level of self-awareness that forces us to think about what happens to our machines when they become sufficiently sophisticated. In his 1955 short story Autofac, automatic factories continue to operate long after the need for them has passed. They engage in warfare with other autofacs to gain control of scarce resources, while humans, who no longer control them, scrabble to survive in the wreckage. A similar failure of control is apparent in D.F. Jones’ 1966 book Colossus, in which a giant computer given control of North American defense effectively takes over the world in cooperation with its USSR counterpart. Of course, the theme of the out-of-control creation in science fiction goes back to Mary Shelley’s Frankenstein (1818).

However, whereas Frankenstein’s creation could cause limited damage within a village, Colossus and its equivalent have a power that is truly global in consequence. Eric Drexler’s “grey goo” echoes Dick’s autofac: billions of environmental-cleansing nanobots out of control, converting everything on the planet to harmless dust. Should we be concerned, as designers, with degrees of autonomy? The boundaries are certainly being approached when we consider the use of autonomous drones. Currently “target identification” is left to humans, but there has been military research into enabling this role to be entirely automated. See Peter Finn’s article in The Washington Post (http://wapo.st/UYT2VY). It is a truly frightening scenario and, of course, the very premise of many of the Terminator movies.

In Zelazny’s short story The Hangman, a semi-autonomous humanoid robot, while being controlled by telepresence, kills a policeman. Driven almost insane by guilt, it disappears only to return years later to confront its controllers who (of course!) misunderstand its motives.

Another dystopian view of technology can be found in the 1971 novel Roadside Picnic, written by Arkady and Boris Strugatsky, the source for Andrei Tarkovsky’s stunning movie Stalker, and the more recent game S.T.A.L.K.E.R.: Shadow of Chernobyl. In the novel, aliens have briefly visited and departed. In each of the six visitation zones, they have left behind machinery and artifacts whose purpose and operation are arcane and which can be inert, extremely dangerous, or immensely powerful. In the face of governmental attempts to establish and maintain control over the mysterious zones, “stalkers” enter and risk their lives and their sanity to extract items for sale on the black market. Although written prior to the Chernobyl disaster, the book and film seem strangely prescient in describing a sealed-off no-go zone. Here we encounter a technology so sophisticated that nobody understands it.

As UX practitioners, we strive to hide the complexity inherent in many of the objects we design. We see this as both necessary and appropriate, and certainly this is something I always advocate with my clients. However, perhaps we should consider whether there are unintended consequences of doing so. Do we risk becoming like the Eloi in H.G. Wells’ classic The Time Machine (1895) who, in the year 802,701, live a life of ease and contentment but are at the mercy of the technologically sophisticated Morlocks who breed them?

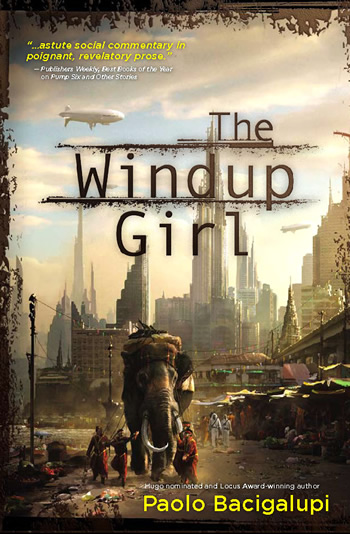

A recent dystopian novel is Paolo Bacigalupi’s The Windup Girl (2009), set in Thailand in a semi-post-apocalyptic world in which global warming has caused immense damage to human society and biodiversity. The protagonist, Anderson, is looking through his rapidly deteriorating books when he comes across a photograph of a fat foreigner bargaining with a Thai farmer at a market in the pre-emergency days, and is stirred to anger by their blithe lack of awareness of the wealth that surrounds them, and its fragility. “These dead men and women have no idea that they stand in front of the treasure of the ages… the fat, self-contented fools have no idea of the genetic gold mine they stand beside.” He wishes that he could drag them out of the photograph and into the present, so that he could “express his rage at them directly.” Bacigalupi presents a vision of the future that is all too plausible.

Perhaps such dystopian visions can serve as warnings and motivate us as designers to consider the sustainability of our work practices and our lives in general. In the movie Blade Runner, the bounty hunter Deckard says that “Replicants are like any other machine, they’re either a benefit or a hazard.” The book on which the movie is based, Dick’s Do Androids Dream of Electric Sheep? (1968), is more nuanced: “A humanoid robot is like any other machine; it can fluctuate between being a benefit and a hazard very rapidly. As a benefit it’s not our problem.”

As a benefit, our creations are not a problem. Despite Stephenson’s plea for more positive sci-fi, plenty of authors are writing about the promise of technology and bright futures: Iain M. Banks’ Culture series, for example, or Greg Egan’s Diaspora (1997).

No doubt as a society, we need beacons that will encourage us to look outwards and aspire to greatness. But we also need to be aware of the potential for our creations to behave in ways that we may not have envisaged, or be put to uses we may not condone. Dystopian sci-fi enables us to see the bugs in our vision more clearly; thus these works provide us with additional insight we might otherwise not be able to imagine.

Until it is too late.