Digital gaming is the world’s largest entertainment industry, growing at around 15% per year and reaching $137.9 billion (USD) in annual revenue. The industry is incredibly varied, ranging from small mobile games made by independent developers to major commercial releases whose production budgets and revenue exceed those of Hollywood films. Over the past decade, the games industry has advanced substantially in technology, design, and business practices. With the introduction of new platforms (e.g., mobile, virtual reality, augmented reality), new publishing models (e.g., free-to-play, season passes), and new audiences (e.g., kids, older adults), developers are rapidly adapting to provide engaging experiences for players across these new design frontiers.

With a growing emphasis on creating fulfilling user experiences, game designers have paid more attention to design techniques that involve players in the development process (e.g., user-centric and participatory design). These efforts helped to establish the field of games user research (GUR) among academic and industry practitioners. GUR aims to understand players (users) and their behavior so that developers can optimize the user experience and bring it closer to their design intent (see my previous article on GUR).

GUR fundamentally relies on iterative design and development processes, where prototypes created by game developers are evaluated through testing with players drawn from the game’s target audience. Insights gained from this process help developers improve their designs until the desired player experience is achieved. User testing (also often referred to as play testing in the game industry) is one key evaluation approach whereby researchers aim to understand player behavior, emotions, and experience by collecting and analyzing data from players interacting with a prototype or pre-released version of a game.

Repeatedly creating high-fidelity prototypes (game builds) suitable for user testing is not only time-consuming but also expensive. Moreover, recruiting users and conducting evaluation sessions are labor-intensive tasks. These challenges are especially pressing in game evaluation, where designers may wish to evaluate many alternatives or rapidly measure the impact of many small design changes on a game’s ability to deliver the intended experience. How might we lessen this burden while improving our ability to conduct more representative and comprehensive testing sessions? What if we developed a user experience (UX) evaluation framework driven by artificial intelligence (AI)?

In this article, I highlight the lessons we learned from projects focused on developing game evaluation tools that use customizable AI players.

AI, Games, & UX

Artificial intelligence research has always had a strong relationship with games, which often serve as a testbed for novel algorithms. Some of the earliest AI algorithms, developed in the 1950s, were designed to play games, and continual advancement eventually produced systems capable of defeating world-class human champions, such as IBM’s famed Deep Blue, which defeated Garry Kasparov in chess, and the Othello-playing program Logistello, which defeated Takeshi Murakami. In time, AI grew in complexity and applicability beyond playing games to supporting game development itself, reducing human labor and resource requirements.

Within UX evaluation in games, AI extends the limits of what researchers can accomplish, augmenting human analysis through intelligent interfaces and helping build player models from large data collections. AI also supports complex and difficult analysis tasks, such as recognizing players’ emotional responses.

Investigations of user behavior often rely on extracting insights from massive collections of player telemetry data, such as movement through a game’s world, time to complete tasks, and character deaths. With data from thousands of players, manual analysis may prove impossible. AI helps to improve the efficiency of many of the tedious tasks associated with such complex analyses, like processing large and disparate datasets and predicting behavior. Based on existing data, what future user actions can we predict? Such predictions may be valuable for projecting and evaluating user experience, for example, to create adaptive game interfaces.
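As a rough illustration of the kind of aggregation involved, the Python sketch below bins character-death events into grid cells and averages task-completion times across players. The `TelemetryEvent` schema, the event names, and the cell size are hypothetical choices for this example, not a format used by any particular game or tool; the predictive modeling mentioned above would sit on top of summaries like these.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class TelemetryEvent:
    """One telemetry record (hypothetical schema for illustration)."""
    player_id: str
    kind: str   # hypothetical event names, e.g. "death" or "task_complete"
    x: float    # world position where the event happened
    y: float
    t: float    # seconds since the session started


def deaths_per_cell(events, cell_size=10.0):
    """Bin 'death' events into square grid cells so that hot spots stand out."""
    counts = Counter()
    for e in events:
        if e.kind == "death":
            counts[(int(e.x // cell_size), int(e.y // cell_size))] += 1
    return counts


def mean_completion_time(events):
    """Average, over players, of the first time each player logged 'task_complete'."""
    first_done = {}
    for e in events:
        if e.kind == "task_complete":
            first_done[e.player_id] = min(e.t, first_done.get(e.player_id, e.t))
    return mean(first_done.values()) if first_done else None


events = [
    TelemetryEvent("p1", "death", 12, 3, 40),
    TelemetryEvent("p2", "death", 15, 8, 55),
    TelemetryEvent("p1", "task_complete", 90, 90, 120),
    TelemetryEvent("p2", "task_complete", 90, 90, 180),
]
print(deaths_per_cell(events))
print(mean_completion_time(events))
```

With thousands of players, the same two passes over the event stream scale linearly, which is where automated processing starts to pay off over manual review.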

AI has also been used as a proxy for human users in UX evaluation and quality assurance (QA) testing, but questions about user experience are far more subjective than their QA counterparts. In UX evaluation, the task often centers on creating agents that “think like humans.” By creating and observing AI agents that obey a game’s rules and/or approximate player behavior, game designers try to better understand how different players will respond to a given game event. Agent-based testing has already shown promise as a means of reducing the resource and labor requirements of game testing. Another testing application focuses on evaluating playability, especially the objective features of a game’s content, such as whether it is possible to complete a given level or how many possible solutions exist for a given puzzle.
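The objective side of playability testing can be quite mechanical. For instance, checking whether a level can be completed at all reduces to a reachability search. The sketch below runs a breadth-first search over a hypothetical tile grid (`S` start, `G` goal, `#` wall); a real tool would query the engine’s navigation data rather than an ASCII map.

```python
from collections import deque


def find(level, ch):
    """Return the (row, col) of the first occurrence of `ch` in the level."""
    for r, row in enumerate(level):
        c = row.find(ch)
        if c != -1:
            return (r, c)
    raise ValueError(f"{ch!r} not found in level")


def is_completable(level, wall="#"):
    """Breadth-first search from 'S' to 'G' over 4-connected, non-wall tiles."""
    start, goal = find(level, "S"), find(level, "G")
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < len(level) and 0 <= nc < len(level[nr])
                    and level[nr][nc] != wall and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False


print(is_completable(["S..#",
                      ".#..",
                      "...G"]))
print(is_completable(["S.#.",
                      "..#G",
                      "..#."]))
```

Counting distinct puzzle solutions is a harder variant of the same idea (enumerating paths or move sequences rather than stopping at the first hit), but it remains an objective, rule-based check rather than a question of experience.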

PathOS

In our lab (UXR Lab, Ontario Tech University), we focus on the development of UX research frameworks and tools that directly support the work of both academic and commercial GUR practitioners in the collection and analysis of gameplay data. One of our current projects is PathOS, an open-source Unity tool and framework that explores the utility of AI-driven agents in game user testing. We are interested in modeling player navigation in games with an independent, configurable agent intended to imitate the spatial (navigational) decision-making of human players. Our goal is to build a system capable of simulating player navigation for a diverse population of AI-controlled agents (users), standing in for early-stage user testing aimed at evaluating level and game design. In particular, we focus on producing customizable models of human memory, reasoning, and instinct pertaining to spatial navigation.

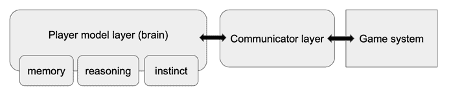

The PathOS framework consists of two key layers designed to work atop any navigational data system (e.g., a navmesh, or navigation mesh) contained within a given game engine or development framework. These two layers are the non-omniscient player model, analogous to a “brain” or decision-making machine, and an intermediary communicator, analogous to the sensory organs (see Figure 1).

Figure 1. Logical layers of the PathOS framework.

The core functionality of PathOS resides within the brain of the system. While traditional navigation modeling focuses on the generation of optimal paths, we are especially interested in modeling fallible components of human perception and reasoning to simulate issues with player navigation, such as getting lost, missing key areas, or losing track of game objectives.

To this end, our memory component will track past actions (e.g., turning) and “visible” information to construct a simulated mental model of player surroundings. Information contained within “user” memory will be mutable and forgettable based on factors such as information volume, action speed, and simulated experience level. Simulated player reasoning will be based on patterns of action available in memory (e.g., having traveled in the same direction for several paces), information given about objectives (e.g., an in-game compass indicating that the goal is to the north), and spatial cues (e.g., placement of walls). Finally, the instinct component aims to help model how personality and past experience might influence navigational behavior by emulating, for instance, an inexperienced player’s tendency to favor investigating safe, open spaces over potentially dangerous narrow corridors.
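One simple way to make memory “mutable and forgettable” is to give each remembered item a strength that fades over time and to cap how many items fit. The sketch below assumes exactly that model; the class names, the linear decay, and all default values are hypothetical and are not taken from PathOS. A simulated experience level could be expressed by lowering `decay_per_step` or raising `capacity`.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryEntry:
    label: str        # what was perceived, e.g. "door_north" (hypothetical label)
    strength: float   # 1.0 when freshly observed; fades each step


@dataclass
class AgentMemory:
    """Forgetful memory sketch. All parameter names and defaults are hypothetical."""
    capacity: int = 5             # cap on remembered items (information volume)
    decay_per_step: float = 0.2   # forgetting rate; an "experienced" agent might use less
    threshold: float = 0.3        # entries weaker than this are forgotten
    entries: dict = field(default_factory=dict)

    def observe(self, label):
        """Add or refresh an entry at full strength; evict the weakest if over capacity."""
        self.entries[label] = MemoryEntry(label, 1.0)
        if len(self.entries) > self.capacity:
            weakest = min(self.entries.values(), key=lambda e: e.strength)
            del self.entries[weakest.label]

    def tick(self):
        """Advance one time step: every entry fades and faded entries are dropped."""
        for entry in self.entries.values():
            entry.strength -= self.decay_per_step
        self.entries = {k: e for k, e in self.entries.items()
                        if e.strength >= self.threshold}

    def remembers(self, label):
        return label in self.entries


memory = AgentMemory()
memory.observe("door_north")
for _ in range(4):
    memory.tick()
print(memory.remembers("door_north"))
```

A fixed linear decay keeps the example readable; tying the decay to action speed (more steps per second means faster forgetting per unit of distance) would be one way to reflect the other factors listed above.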

The communicator filters information available to the brain from the navigational data, based on player point-of-view visibility. The brain maintains the artificial player’s memory and makes navigational decisions based on information from memory, characteristic play style, and “sensory information” from the communicator. Decisions the brain makes feed into the communicator, which in turn manipulates an in-game agent and logs actions accordingly.
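The sense, decide, act loop described above can be sketched in a few dozen lines. In the hypothetical Python below, the communicator exposes only waypoints within a view distance and field of view, the brain picks the nearest unvisited visible waypoint, and the communicator moves the agent and logs the move. PathOS itself is a Unity tool whose brain weighs memory, play style, and objectives far more richly; the class names, the waypoint abstraction, and the 90-degree field of view here are assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Waypoint:
    name: str
    x: float
    y: float


@dataclass
class Agent:
    x: float
    y: float
    heading: float = 0.0   # radians; 0 means facing +x


class Communicator:
    """'Sensory' layer sketch: filters waypoints by view distance and field of view."""

    def __init__(self, waypoints, view_distance=20.0, fov_degrees=90.0):
        self.waypoints = waypoints
        self.view_distance = view_distance
        self.half_fov = math.radians(fov_degrees) / 2
        self.log = []          # names of waypoints the agent moved to, in order

    def visible(self, agent):
        seen = []
        for w in self.waypoints:
            dx, dy = w.x - agent.x, w.y - agent.y
            dist = math.hypot(dx, dy)
            if dist == 0 or dist > self.view_distance:
                continue
            # Signed angle between the agent's heading and the waypoint, in [-pi, pi).
            diff = (math.atan2(dy, dx) - agent.heading + math.pi) % (2 * math.pi) - math.pi
            if abs(diff) <= self.half_fov:
                seen.append(w)
        return seen

    def execute(self, agent, target):
        """Turn toward and move to the chosen waypoint, then log the action."""
        agent.heading = math.atan2(target.y - agent.y, target.x - agent.x)
        agent.x, agent.y = target.x, target.y
        self.log.append(target.name)


class Brain:
    """Decision layer sketch: remembers visits and prefers the nearest unvisited waypoint."""

    def __init__(self):
        self.visited = set()

    def decide(self, agent, visible):
        if not visible:
            return None
        fresh = [w for w in visible if w.name not in self.visited]
        pool = fresh or visible
        choice = min(pool, key=lambda w: math.hypot(w.x - agent.x, w.y - agent.y))
        self.visited.add(choice.name)
        return choice


def run(agent, brain, comm, max_steps=10):
    """Sense -> decide -> act loop; stops when nothing is visible."""
    for _ in range(max_steps):
        target = brain.decide(agent, comm.visible(agent))
        if target is None:
            break
        comm.execute(agent, target)
    return comm.log


level = [Waypoint("A", 10, 0), Waypoint("B", 20, 0), Waypoint("C", 0, 10)]
print(run(Agent(0, 0), Brain(), Communicator(level)))
```

In this toy level, waypoint C is never visited because it never enters the agent’s field of view, which is the kind of missed area a non-omniscient agent is meant to surface.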

In any given game navigation scenario, players may have a number of factors to consider before making their next move, such as current objectives, hazards or enemies, and a desire to explore. Simulated agents should therefore evaluate available navigational alternatives based on heuristics such as alignment with game objectives (i.e., might this pathway lead to an in-game goal?), potential for danger (i.e., are enemies visible along this pathway?), potential for discovery (i.e., is this new territory?), and number of previous traversals.
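The heuristics in this paragraph suggest a weighted score per pathway, with the weights acting as a player profile. The sketch below assumes a linear combination; the feature names, the two profiles, and their weights are hypothetical values chosen to show how a cautious agent and an exploratory agent can diverge on the same choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PathOption:
    name: str
    objective_alignment: float  # 0..1: might this pathway lead to an in-game goal?
    visible_enemies: int        # enemies visible along the pathway
    is_new_territory: bool      # would taking it reveal unexplored space?
    traversals: int             # times the agent has already taken it


@dataclass(frozen=True)
class Profile:
    """Hypothetical per-player weights; names and values are illustrative only."""
    w_objective: float
    w_danger: float
    w_discovery: float
    w_repeat: float


def score(option, p):
    """Higher is more attractive: reward goals and novelty, penalize danger and repeats."""
    return (p.w_objective * option.objective_alignment
            - p.w_danger * option.visible_enemies
            + p.w_discovery * (1.0 if option.is_new_territory else 0.0)
            - p.w_repeat * option.traversals)


def choose(options, profile):
    return max(options, key=lambda o: score(o, profile))


CAUTIOUS = Profile(w_objective=1.0, w_danger=2.0, w_discovery=0.2, w_repeat=0.3)
EXPLORER = Profile(w_objective=0.5, w_danger=0.3, w_discovery=1.5, w_repeat=0.5)

corridor = PathOption("narrow corridor", 0.9, 1, True, 0)
plaza = PathOption("open plaza", 0.4, 0, False, 1)
print(choose([corridor, plaza], CAUTIOUS).name)
print(choose([corridor, plaza], EXPLORER).name)
```

With these illustrative weights, the cautious profile picks the open plaza and the explorer picks the narrow corridor. A linear score is only one option; its main appeal is that each heuristic’s influence stays easy to inspect and tune.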

What’s Next

Integration of agent-based testing into a commercial development cycle would vary significantly depending on the nature of a given project. For PathOS, we envision the framework as having the greatest utility early in the development process to give developers a coarse indication of how players might experience the virtual world. After creating an initial level design, the framework could be deployed to identify likely navigation patterns of players within that design. Unintended or unexpected features of these patterns may then be used to identify and remedy issues related to world design. For instance, if a developer notes that an intended objective is not reached by the majority of agents, the developer can review a simulated playtrace (movement) to identify whether the unmet objective is caused by poor visibility, a lack of points of interest (POI) in the surrounding area (see Figure 2), or some other factor (e.g., a high density of hazards discouraging cautious players from exploring a given region). Based on the insight gained, the design can then be iteratively refined and retested to validate the intended experience.
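Spotting an objective that most agents miss can start with a simple tally over the simulated playtraces. The sketch below assumes each agent’s playtrace has already been reduced to the list of objective or POI names it reached; that format, the function names, and the 50% threshold are hypothetical and do not reflect PathOS’s actual log format.

```python
def objective_reach_rates(playtraces, objectives):
    """Share of agents whose playtrace includes each objective.

    playtraces: one sequence of reached objective/POI names per agent (hypothetical format).
    """
    if not playtraces:
        raise ValueError("need at least one playtrace")
    visited_sets = [set(trace) for trace in playtraces]
    return {obj: sum(obj in v for v in visited_sets) / len(visited_sets)
            for obj in objectives}


def flag_missed_objectives(playtraces, objectives, min_rate=0.5):
    """Objectives reached by fewer than `min_rate` of agents, worth reviewing in the playtraces."""
    rates = objective_reach_rates(playtraces, objectives)
    return sorted(obj for obj, rate in rates.items() if rate < min_rate)


traces = [["gate", "shrine"], ["gate"], ["gate", "vault"], ["gate"]]
print(flag_missed_objectives(traces, ["gate", "shrine", "vault"]))
```

The tally only says which objectives to look at; deciding whether the cause is poor visibility, a lack of POI, or hazards still requires reviewing the playtraces themselves.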

Figure 2. Example visualization of paths taken and engagements with POI by different AI-agents in the PathOS framework (Created by Atiya Nova, UXR Lab).

Identifying basic issues early in the development process prevents these issues from propagating to later testing with human users. For example, if the goal of a late-stage user test is to assess players’ experience with a game’s narrative, but players miss interactions with key characters due to basic issues with level design, then the ability of the test to accurately investigate the core research question has been compromised. Testing early with artificial agents to identify these basic issues could help prevent these situations. Furthermore, the pursuit of continuous, informed improvement from a very early stage of development may contribute to overall improved product quality and a better experience for the end user.

Acknowledgments and Further Reading

Recent projects at UXR Lab motivated me to write this article about exploring AI applications in games UX evaluation. I would like to thank and acknowledge the research students who were involved with these projects: Samantha Stahlke, Atiya Nova, and Stevie Sansalone. This article summarizes the key lessons we learned from these projects. For more information, please refer to the following:

- PathOS is being developed as an open-source framework for the Unity game engine. You can download a Unity project containing the PathOS source code, all necessary assets, and the tool’s user manual on GitHub. Try it out to see how it works in greater detail, or adapt the code for your own game project.

- To understand more about our research, read the paper we presented at the CHI PLAY 2020 Conference: “Artificial Players in the Design Process: Developing an Automated Testing Tool for Game Level and World Design” (PDF) by Samantha Stahlke, Atiya Nova, and Pejman Mirza-Babaei. You can also watch our video presentation from the conference.

- For an excellent perspective on how AI connects to game development, read Julian Togelius’s book Playing Smart: On Games, Intelligence, and Artificial Intelligences.

- For insights from more than 40 experts on UX research in games, Games User Research is a must-read. This book, edited by Anders Drachen, Pejman Mirza-Babaei, and Lennart E. Nacke, compiles these insights into an overview of design principles that have been developed over many years.

Pejman Mirza-Babaei is a Games UX consultant, author, and professor. He is known for his books, The Game Designer's Playbook (2022) and Games User Research (2018). As the former UX Research Director at Execution Labs (Montréal, Canada), he worked on the pre- and post-release evaluation of over 25 commercial games.